In a recent webinar moderated by Mark Tomlinson, Gene Kim shared the top insights he discovered while co-authoring The DevOps Handbook and Andi Grabner shared lessons learned from Dynatrace’s own DevOps transformation. What follows in this eBook is an excerpt from that live interview session.

What are the common characteristics of companies that have successfully adopted DevOps?

Top performers are more agile, more reliable, and more secure and controlled.

What I love the most about our new book is that it has 48 case studies and 503 endnotes. That shows just how many organizations we were able to chronicle for the book.

Out of that and with the work that I did with Jez Humble and Dr. Nicole Forsgren in conjunction with Puppet, we have benchmarked over 26,000 organizations. This process has led us to identify some clear, common characteristics of the top DevOps performers. They…

How is the creation of business value different in the DevOps environment?

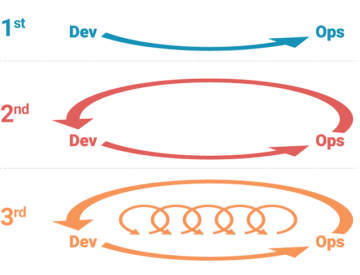

The “Three Ways” that DevOps creates business value

I’m going to "steal" Gene Kim’s framework from his first book, The Phoenix Project, for this discussion because it answers this question so nicely.

In the framework, The Three Ways, the 1st Way is about increasing the flow of value through the development pipeline.

We see challenges with this when we first start working with some customers. They typically start out with either a nonexistent development pipeline or a broken one. It’s very manual and error-prone, has no built-in quality checks, and has no feedback loop.

There are clear best practices to improve the flow of value in the development pipeline:

- Focus on quality early.

- Don’t just push code out.

- Treat infrastructure as code so that every stage of the pipeline deploys to an environment that matches production.

- Break up the monolith into smaller pieces with smaller teams working independently.

When these best practices are put into place, you move from a broken, manual pipeline to an automated pipeline. This is the 1st Way – system thinking and automation – getting stuff from Dev to Ops with a focus on quality.
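As a rough illustration of "focus on quality early" and "don't just push code out", an automated pipeline can be pictured as an ordered list of stages, each acting as a quality gate. The sketch below is only that: the stage names and the `run_pipeline` helper are hypothetical and do not describe any particular CI product.

```python
def run_pipeline(stages):
    """Run (name, check) stages in order and stop at the first failing gate.

    Each check is a callable returning True when the stage passes.
    """
    completed = []
    for name, check in stages:
        if not check():
            # Fail fast: later stages (including deployment) never run.
            return {"passed": False, "failed_stage": name, "completed": completed}
        completed.append(name)
    return {"passed": True, "failed_stage": None, "completed": completed}


# Hypothetical stages; a real pipeline would invoke build and test tools here.
example_stages = [
    ("unit tests", lambda: True),
    ("performance check", lambda: False),
    ("deploy to production-like environment", lambda: True),
]
result = run_pipeline(example_stages)
```

Because the failing performance check sits before the deployment stage, the problem is caught while the change is still small and cheap to fix.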

The 2nd Way is having processes in place to get user feedback, and the 3rd Way is using these feedback loops to foster a culture of experimentation. If you have fast feedback loops and changing a button from red to green doesn’t work for customers, you can fix it quickly without a large impact. With the 3rd Way, you also have the ability to easily remove functionality that nobody needs.

The beauty of DevOps: A real-life example

If you think back not too long ago, when you went on a trip you took pictures... on film!

If you didn’t fill up the film, you took it on the next trip weeks or months later until you had what I like to call a big bang release – it was 24 features in a box.

Then you dropped it off to be developed and you were looking forward to finally seeing the pictures weeks or months later, but sometimes you got your pictures and realized they weren’t what you expected at all. Something was too dark or overexposed or didn’t develop.

This is the old way, the waterfall way of how a lot of businesses think about delivering value to customers. Now, in the modern way, you use your iPhone. You take pictures one at a time or one feature at a time.

Then you optimize the picture yourself on the phone and finally, seconds later you deploy it into production on Instagram, Facebook, etc. You immediately get feedback from the customer, your friends. They like it or they don’t, and you optimize it or you take it down.

If you do this continuously, this is the benefit of DevOps. You are breaking up deployments into smaller pieces allowing continuous innovation and optimization, allowing people to experiment, and that’s just the beauty of it.

What are your favorite DevOps transformation stories?

How GWS created a culture of testing excellence

There are two stories from our research on the book that I find to be most powerful. The first one is the Google Web Server (GWS) transformation.

Google has an amazing testing culture. The use of automated testing, after all, is a predictor of which organizations are top performers. However, in 2005, deploying to Google.com was actually a very dangerous proposition. People did it all the time, but it was really sort of the dumping ground where all developers put their poorly tested code, and then went to sleep. There were incorrect search results and availability failures.

Mike Bland with GWS finally said, "No more." He required developers to accompany code with automated testing or it could not be checked in.
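A rule like "no automated tests, no check-in" can be enforced mechanically. The following is a minimal sketch of such a gate, not Google's actual tooling: it assumes a hypothetical naming convention in which a source file `rank.py` is covered by a test file named `test_rank.py` in the same change.

```python
from pathlib import PurePosixPath


def files_missing_tests(changed_files):
    """Return changed Python source files with no accompanying test file.

    Assumed convention (hypothetical): foo.py is accompanied by test_foo.py
    somewhere in the same change.
    """
    paths = [PurePosixPath(f) for f in changed_files]
    # Names of the test files included in this change.
    test_names = {p.name for p in paths if p.name.startswith("test_")}
    missing = []
    for p in paths:
        if p.suffix != ".py" or p.name.startswith("test_"):
            continue  # skip non-Python files and the tests themselves
        if f"test_{p.name}" not in test_names:
            missing.append(str(p))
    return missing


def may_check_in(changed_files):
    """Allow the check-in only when every changed source file has a test."""
    return not files_missing_tests(changed_files)
```

A version-control hook could call `may_check_in` with the list of changed paths and reject the change when it returns `False`.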

Within a year, the GWS team became the highest-performing team at Google in terms of being able to deploy code quickly, seamlessly, and safely into the production environment.

One of my favorite quotes from Mike Bland is – "People were afraid to deploy to Google.com especially when you are a new employee because you didn’t understand it at all, but it was also prevalent in people who understood it all too well. Fear became the mind killer."

Three to five years later, that culture of testing excellence was adopted across the Google enterprise, and the technique that they used was called Testing on the Toilet (TotT). They actually posted flyers inside every bathroom stall showing "Here’s how we can help you with automated testing." This successfully led to the propagation of those practices throughout the Google organization.

Now they are doing 5,500 code deployments per day. They’re running hundreds of millions of test cases daily. I love this quote from Eran Messeri at Google, "It is only through automated testing that we can transform fear into boredom."

Right? It’s only through safety that experimentation can happen.

How Etsy went from two teams to three, then down to one

At Etsy, every time they wanted to change a piece of business logic, two teams had to be involved: the front-end developers making changes in the PHP code and the DBAs making changes to the stored procedures. Their vision was to allow each team to work more independently of the other, so they created this thing called Sprouter, a stored procedure router.

The idea was that the Devs would work independently of the DBAs, and they would just meet in the middle in the Sprouter technology. The problem was that before, they needed two teams to work in parallel, and now they needed three. That level of coordination is rarely achieved. Almost every deployment was a mini outage.

Their countermeasure was to actually get rid of Sprouter. Instead, they built an object-relational mapping (ORM) layer so that developers could make changes to the database directly. They could add and remove changes without having to deal with the DBAs.

They went from two teams up to three then down to one. The result was that lead time went down. The amount of communication and coordination required went down. Reliability went up.

I thought this was just an amazing example of Conway’s Law at work, showing how it can hurt to go from two teams to three and how it can help to allow developers to self-develop, self-test, and self-deploy. If you want fast flow and feedback, having responsibility for all aspects of a service in one group is a huge predictor of outcome. For me, that was probably one of the biggest findings from the case studies in the book.

How was DevOps used to transform Dynatrace?

Why Dynatrace had to change

We had to change the way we developed software because our market is going through a disruption.

Back in 2011, we began seeing a lot of Dynatrace customers migrate away from only on-premise to VM, Cloud, Containers, PaaS, and other new technologies. We also saw the architecture change. Instead of the monolith, we saw people building microservice applications, on-demand scaling, etc.

We began seeing many Cloud Native companies start up. Uber for instance, is a Cloud Native company. They say "Well, we don’t do on-premise. We just want to have everything SaaS-based."

The digital transformation also changed the way people think about monitoring, measurement, and analytics. Monitoring was no longer just about CPU, memory, and disk. It was also about looking at the impact of performance on user behavior and getting those answers over to the line of business.

This was the way 2011 was for us. We’d been good in the market, but we knew we needed to be able to make changes quickly to remain a leader. We also had to bridge the gap between the New Stack and enterprise technology.

Our customers were building in the Cloud using Angular, using NodeJS, using the latest technology and PaaS, and combining it with the traditional enterprise stack technology. This means applications still running on Java WebSphere talking to the mainframe through message brokers, but using New Stack apps in front of all that. We needed to be sure our monitoring tools could keep up with these environments.

Dynatrace’s move to DevOps

To solve the problem, we set out a mission statement for our SaaS offering.

Instead of releasing every six months, we wanted to be able to go from code to production in an hour. That’s continuous delivery.

In 2016, we achieved all of the things we set out to do. We now do 26 major releases per year and 170 production deployments, and this was only possible by using DevOps principles – breaking down the monolith, shifting performance left to the beginning of the development pipeline, etc.

We learned a lot while developing the SaaS-based solution, and we applied those lessons to our on-premise solution as well. We also broke that big monolith down into smaller components and implemented features like automated rollback.

Another place we had to change was education. We used to do an education release 60 to 90 days after the product release. Obviously, that didn’t make sense with our new frequency of releases, so we had to figure out how to include our education department in our continuous delivery sprints. We also found new ways of delivering content – Dev blogs, YouTube videos, etc.

And finally, we made changes to testing and development to accommodate the New Stack technologies such as NodeJS, Angular, Docker, and Red Hat OpenShift Container Platform. We had to make sure our support teams could run these technologies to validate support tickets and that engineering teams had easy access to these technologies. That’s why we went to an infrastructure as code model where anybody can get these environments on-demand.

What was it like to go through this process internally at Dynatrace?

Three tips

These three tips made our lives at Dynatrace much easier.

#1 Increase sprint quality

We had a two-week Sprint already, but what we changed was that Sprint reviews could only be done on what we call DynaSprint. We have two systems. One, DynaDay, is where we deploy daily builds, and the second, DynaSprint is where we deploy the sprint build.

In Sprint reviews, it was not acceptable if a developer showed a feature on the Dev machine. That would only mean it works on the Dev machine, but it doesn’t prove that it works in the sprint environment.

We also deployed the sprint builds into our internal production environment, which means we monitor our own services and websites. Whether it’s the website, our support system that runs on JIRA, our community on Confluence or another system, we monitor it with Dynatrace.

When we started deploying the sprint builds in these environments and our build broke something, everybody in the organization immediately felt the pain. It was amazing, actually. Everyone was in it together.

#2 Raise awareness of pipeline quality

Here, we developed our own dashboard visualization of the pipeline builds from the different teams. We also introduced what we call the Pipeline State UFO. It’s this funky little thing that you see hanging from the office ceiling. This is basically a very easy sprint status visualization for everybody in the office.

If you’re a developer and you have checked in your code and you walk over to the coffee machine while your build gets executed, you can see if something breaks and the light goes from green to red. You get immediate feedback.
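The logic behind a status light like this can be very small. The sketch below is an illustration only and not the actual Pipeline State UFO implementation: `pipeline_light` is a hypothetical helper that reduces the latest build result of each pipeline to a single color, and the pipeline names are made up.

```python
def pipeline_light(latest_builds):
    """Return the light color and the pipelines that are currently broken.

    latest_builds maps a pipeline name to True (last build passed) or
    False (last build failed).
    """
    broken = sorted(name for name, passed in latest_builds.items() if not passed)
    # A single broken pipeline is enough to turn the light red.
    color = "red" if broken else "green"
    return color, broken


color, broken = pipeline_light({"server": False, "agent": True, "ui": False})
```

Returning the list of broken pipelines alongside the color lets a dashboard show which team needs to look, while the light itself stays a simple red or green signal.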

#3 Build in feedback loops

In SaaS-based environments, it’s easier to build in feedback loops. You can monitor your end users to figure out who is using which features. We also applied some of the lessons to our on-premise product.

We monitor which features are used on our on-premise product. Then, we can say, "Look at this. We always thought, because our sales told us, that this feature is needed by everyone out there. We build the feature and guess what? Nobody is using it," or "Look at this. This feature is used by everybody, but we have a lot of support tickets on it. Maybe we actually need to optimize it."
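The two conclusions in that quote, "nobody is using it" and "everybody uses it but it generates tickets", amount to a simple classification over usage and support data. The sketch below shows one way to express it; the `FeatureStats` fields, the `review_feature` helper, and all threshold values are assumptions chosen for illustration, not Dynatrace's actual rules.

```python
from dataclasses import dataclass


@dataclass
class FeatureStats:
    name: str
    active_users: int     # users who used the feature in the period
    support_tickets: int  # tickets filed against the feature in the period


def review_feature(stats, total_users, low_usage=0.05, high_usage=0.5, ticket_rate=0.02):
    """Classify a feature from its usage share and tickets per active user.

    The thresholds are hypothetical defaults and would need tuning.
    """
    usage = stats.active_users / total_users if total_users else 0.0
    if stats.active_users == 0 or usage < low_usage:
        return "candidate for removal"
    tickets_per_user = stats.support_tickets / stats.active_users
    if usage >= high_usage and tickets_per_user >= ticket_rate:
        return "candidate for optimization"
    return "keep as is"
```

For example, a feature used by 10 of 1,000 users falls under the removal threshold, while one used by 900 users with 45 tickets is flagged for optimization.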

What is different about this current chapter of the DevOps adventure?

DevOps isn’t just for the unicorns

We found that DevOps isn’t just for the unicorns. For the past few years, we’ve held the DevOps Enterprise Summit. It is a conference for horses, those complex enterprises that have been around for decades.

It’s for organizations like Western Union, which has been around for 162 years, and Barclays, which was founded in 1634, and not for the unicorns like Google, Amazon, Facebook, etc.

At the last conference, we heard from quite a few of those horses:

- Target created a next generation system of record, an API that worked on demand. Any Dev team could add, change, and remove that information. It enabled 53 new business initiatives in three years, and they went to 100+ deploys per week and less than 10 incidents per month.

- Capital One created an internal shared service spanning the development pipeline. It enables hundreds of deploys per day with a lead time of minutes.

- Macy’s now runs hundreds of thousands of automated tests daily.

- Disney has embedded nearly 100 Ops engineers into line-of-business teams across the enterprise.

- Nationwide Insurance uses DevOps for its legacy state pension fund retirement application – a COBOL system on a mainframe.

It took a certain degree of courage to make these changes in organizations that are not Google or Amazon.

In summary, Dr. Steven Spear talks about the four capabilities of market leaders in his book, The High Velocity Edge. We’ve recognized these capabilities time and again in the top performers we interviewed:

- See problems as they occur – The leaders are doing this through technologies such as automated testing and APM.

- Swarm and solve problems to create new knowledge – Problems that are seen are solved so that new knowledge is built quickly.

- Spread new knowledge throughout the organization – Knowledge cannot be locked up in the organization. The leaders turn local discoveries into global improvement.

- Leading by developing – The job of leaders is not to command and control, but to create other capable leaders who can perpetuate this system of work.

Resources

We hope you learned something new from the interview with Gene Kim and Andi Grabner. No matter where your organization is on its DevOps journey, there is always an opportunity to learn more. Below are a few resources to support you on that journey.

Recommended reading

- Continuous Delivery by Jez Humble and David Farley

- The DevOps Handbook by Gene Kim, Jez Humble, Patrick Debois and John Willis

- The Goal by Eliyahu Goldratt

- The High Velocity Edge by Steven Spear

- The Phoenix Project by Gene Kim, Kevin Behr, and George Spafford

- Release It! by Michael Nygard

- The Other Side of Innovation by Vijay Govindarajan
