As a result, every business needs to embrace software as a core competency to ensure survival and prosperity. However, the transformation into a software company is a significant task, as building and running software today is harder than ever. And if you think it’s hard now, just wait. Your journey has just begun.

Speed and scale: a double-edged sword

You invested in a cloud platform like AWS to build and run your software at a speed and scale that will transform your business, which is exactly where cloud platforms excel. But are you prepared for the complexity that comes with speed and scale?

As software development transitions to a cloud-native approach that employs microservices, containers, and software-defined cloud infrastructure, the immediate future will bring more complexity than the human mind can readily comprehend.

You also invested in monitoring tools—lots of them over the years. But your traditional monitoring tools don’t work in this new dynamic world of speed and scale that cloud computing enables. That’s why many analysts and industry leaders predict that more than 50% of enterprises will entirely replace their traditional monitoring tools in the next few years.

What killed traditional monitoring?

Which brings us to why we’ve written this guide. Your software is important, and choosing the right monitoring platform will make speed and scale your greatest advantage instead of your biggest obstacle.

Your industry peers helped us discover our insights and conclusions

Dynatrace works with the world’s most recognized brands, helping to automate their operations and release better software faster. We have experience monitoring the largest cloud-hosted implementations and assisting enterprises as they manage the significant complexity challenges of speed and scale. Some examples include:

- A large retailer with 100,000+ hosts managing 2,000,000 transactions a second

- An airline with 9,200 agents on 550 hosts capturing 300,000 measurements per minute and more than 3,000,000 events per minute

- A large health insurer with 2,200 agents on 350 hosts, with 900,000 events per minute and 200,000 measurements per minute

At Dynatrace, we experienced our own transformation—embracing cloud, automation, containers, microservices, and NoOps—and now we’re prospering, while other vendors who haven’t transformed are left behind. But don’t just take our word for it. Forrester recognized our achievement and shared our story with the broader IT community to help them transform in the same way we have. You’re welcome.

We saw the shift early on and grew from delivering software through a traditional on-premise model into the successful hybrid-SaaS innovator we are today. To learn more, read the brief “Game changing – From zero to DevOps cloud in 80 days.”

Hybrid cloud is the norm

Insight

Enterprises are rapidly adopting cloud infrastructure as a service (IaaS), platform as a service (PaaS), and function as a service (FaaS) to increase agility and accelerate innovation. Widespread cloud adoption has made hybrid cloud the norm. According to RightScale, 81% of enterprises currently execute a cloud strategy.

Challenge

The result of hybrid cloud is bimodal IT—the practice of building and running two distinctly different application and infrastructure environments. Enterprises must continue to enhance and maintain existing, relatively static environments while also building and running new applications on scalable, dynamic, software-defined infrastructure in the cloud.

Key consideration

Simplicity and cost savings drove early cloud adoption, but today, cloud use has evolved into a complex and dynamic landscape.

The ability to seamlessly monitor the full technology stack across clouds, while also monitoring traditional on-premise technology stacks, is critical to automating operations, no matter how widely distributed the monitored applications and infrastructure are.

Hybrid cloud

As enterprises migrate applications to the cloud, or build new cloud-native applications, they also maintain traditional applications and infrastructure. Over time, this balance will shift from the traditional tech stack to the new stack, but both new and old will continue to coexist and interact.

Microservices and containers introduce agility

Insight

Microservices and containers are revolutionizing the way applications are built and deployed, providing tremendous benefits in terms of speed, agility, and scale. In fact, 98% of enterprise development teams expect microservices to become their default architecture—and IDC predicts that 90% of all apps will feature microservices architectures by 2022.

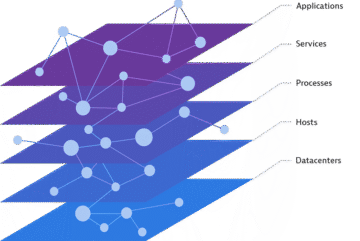

Challenge

According to 72% of CIOs, monitoring containerized microservices in real time is almost impossible. Each container acts like a tiny server, multiplying the number of points you need to monitor, and containers live, scale, and die based on health and demand. As enterprises scale their environments from on-premise to cloud, the number of dependencies and the volume of data generated increase exponentially, making it impossible to understand the system as a whole.

The traditional approach to instrumenting applications involves the manual deployment of multiple agents. When environments consist of thousands of containers with orchestrated scaling, manual instrumentation becomes impossible and will severely limit your ability to innovate.

Key consideration

A manual approach to instrumenting, discovering, and monitoring microservices and containers will not work. For dynamic, scalable platforms, a fully automated approach to agent deployment, continuous discovery of containers, and monitoring of the applications and services running within them is mandatory.

— Dynatrace CIO Complexity Report 2018
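To make the idea of continuous container discovery concrete, here is a minimal sketch in Python. It assumes some external source can supply the set of currently running container IDs on each pass; the names `DiscoveryDelta`, `diff_snapshots`, and `reconcile` are hypothetical and do not come from any Dynatrace or AWS API. The sketch only shows the reconciliation step: compare what is being monitored with what is actually running, then start or stop monitoring accordingly.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DiscoveryDelta:
    """Containers that appeared or disappeared between two discovery passes."""
    started: frozenset
    stopped: frozenset


def diff_snapshots(previous, current):
    """Compare two snapshots of running container IDs."""
    prev, curr = frozenset(previous), frozenset(current)
    return DiscoveryDelta(started=curr - prev, stopped=prev - curr)


def reconcile(monitored, running):
    """Return the updated monitored set after one discovery pass, plus the delta.

    `monitored` is what we currently track; `running` is what the (assumed)
    container runtime reports right now.
    """
    delta = diff_snapshots(monitored, running)
    updated = (set(monitored) | delta.started) - delta.stopped
    return updated, delta


# Hypothetical container IDs, for illustration only.
updated, delta = reconcile({"c-101", "c-102"}, {"c-102", "c-103"})
print(sorted(updated), sorted(delta.started), sorted(delta.stopped))
```

Running this pass on a schedule, or in response to orchestrator events, is what removes the manual step: no one has to decide which containers to instrument.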

Not all AI is equal

Insights

Gartner predicts that 30% of IT organizations that fail to adopt AI will no longer be operationally viable by 2022. As enterprises embrace a hybrid cloud environment, the sheer volume of data created, and the growing environmental complexity, will make it impossible for humans to monitor, comprehend, and take action. The need for machines to solve data volume and speed challenges resulted in Gartner creating a new category, called “AIOps” (AI for IT Operations).

Challenge

AI is a buzzword across many industries, and making sense of the marketing noise is difficult. To help, here are three key AI use cases to keep in mind when considering how to monitor your cloud platform and applications: alert noise reduction, root cause analysis, and auto-remediation.

Many enterprises are trying to address these use cases by adding an AIOps solution to the 25+ monitoring tools they already have. While this approach may have limited benefits, such as alert noise reduction, it will do little to address the root cause analysis and auto-remediation use cases, because it lacks the contextual understanding of the data needed to draw meaningful conclusions.

You will also find there are many different approaches to AI. Here are a few of the more popular ones you are likely to encounter as you move towards an AIOps strategy:

Key consideration

Not all AI is created equal. Attempting to enhance existing monitoring tools with AI, such as machine learning and anomaly-based AI, will provide limited value. AI needs to be inherent in all aspects of the monitoring platform and see everything in real time, including the topology of the architecture, dependencies, and service flow. AI should also be able to ingest additional data sources for inclusion in its algorithms, rather than merely correlating data via charts and graphs.

— Gartner
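Why topology and dependencies matter for root cause analysis can be illustrated with a deliberately simplified sketch. It is not the algorithm used by Dynatrace or any other AIOps product; the function name `find_root_causes` and the example services are hypothetical. The assumption is that a dependency map (which service calls which) and a set of currently unhealthy services are already known. An unhealthy service whose own dependencies are all healthy is treated as a likely root cause, while unhealthy services further up the call chain are treated as symptoms.

```python
def find_root_causes(dependencies, unhealthy):
    """Return unhealthy services that have no unhealthy dependency.

    `dependencies` maps each service to the list of services it calls.
    Simplification: if every unhealthy service sits in a dependency cycle,
    this returns an empty set.
    """
    unhealthy = set(unhealthy)
    roots = set()
    for service in unhealthy:
        callees = dependencies.get(service, [])
        if not any(callee in unhealthy for callee in callees):
            roots.add(service)
    return roots


# Hypothetical three-tier topology: web calls api, api calls db.
topology = {"web": ["api"], "api": ["db"], "db": []}
print(find_root_causes(topology, {"web", "api", "db"}))  # prints {'db'}
```

Without the dependency map, the same three alerts would look like three unrelated problems, which is the limitation of correlating data without context.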

DevOps: Innovation’s soulmate

Insight

DevOps is perhaps the most critical consideration when maximizing the value of cloud technologies. Implemented and executed correctly, DevOps enhances an enterprise’s ability to innovate with speed, scale, and agility. Research shows that high performing DevOps teams have 46x more frequent code deployments and 440x faster lead time from commit to deploy.

Challenge

As enterprises scale DevOps across multiple teams, there may be hundreds or thousands of changes a day, resulting in code pushes every few minutes. While CI/CD tooling helps mitigate error-prone manual tasks through automated build, test, and deployment, bad code can still make it into production. The complexity of highly dynamic and distributed cloud environments like Pivotal Cloud Foundry, along with thousands of deployments a day, will only increase this risk.

As the software stakes get higher, it becomes critical to shift performance checks left (that is, earlier in the pipeline) to enable faster feedback loops. But this can’t be accomplished easily with a tool-based approach to monitoring. To be effective, a monitoring solution needs to have a holistic view of every component, and a contextual understanding of the impact each change has on the system as a whole.

Check which DevOps tooling a monitoring solution integrates with and supports, and consider how that will affect your ability to automate in the future.

Key consideration

To go fast and not break things, automatic performance checks need to happen earlier in the pipeline. To achieve this, a monitoring solution should have tight integration with a wide range of DevOps tooling. Combined with a deterministic AI, these integrations will also help support the move to AIOps and enable auto-remediation to limit the impact of bad software releases.
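An automatic performance check early in the pipeline can be pictured as a simple quality gate. The sketch below is an illustration only, under stated assumptions: the function `evaluate_gate`, the metric names, and the 10% tolerance are all hypothetical and are not taken from any Dynatrace or CI/CD product. It assumes the pipeline can obtain the same metrics for a baseline build and a candidate build, and that lower values are better for every metric.

```python
def evaluate_gate(baseline, candidate, max_regression=0.10):
    """Return (passed, violations) for metrics where lower is better.

    A candidate metric fails if it is missing or if it exceeds the baseline
    by more than `max_regression` (0.10 means 10%).
    """
    violations = []
    for name, base_value in baseline.items():
        new_value = candidate.get(name)
        if new_value is None:
            violations.append(f"{name}: missing from candidate build")
            continue
        limit = base_value * (1 + max_regression)
        if new_value > limit:
            violations.append(f"{name}: {new_value} exceeds limit {limit:.2f}")
    return (not violations, violations)


# Hypothetical metrics for illustration.
baseline = {"response_time_ms": 200.0, "error_rate": 0.01}
candidate = {"response_time_ms": 250.0, "error_rate": 0.01}
passed, violations = evaluate_gate(baseline, candidate)
print(passed, violations)
```

A pipeline step could fail the build when `passed` is false, so a regression is caught before the release reaches production rather than after.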

Digital experiences matter

Insight

Enterprises strive to accelerate innovation without putting customer experiences at risk. However, it’s not just end-customer web and mobile app experiences that are at risk. Apps built in the cloud support a much broader range of services and audiences, including:

- Wearables, smart homes, smart cars and life-critical health devices that have rapidly developed since the consumerization of IT.

- Corporate employees working remotely who need access to systems that are in the corporate datacenter and also cloud-based.

- Office-based employees who rely on smart features for lighting, temperature, safety, and security that depend on machine-to-machine (M2M) communications and the Internet of Things.

What was once regarded simply as user experience has grown into digital experience, encompassing end users, employees, and things.

Challenge

Enterprise IT departments face mounting pressure to accelerate their speed of innovation, while user expectations for the speed, usability, and availability of applications and services increase unabated. Add the explosion of IoT devices and the increasingly vast array of technologies involved, and managing and optimizing digital experiences while embracing high-frequency software release cycles and operating complex hybrid cloud environments presents significant challenges.

If digital experiences aren’t measured, how can enterprises prioritize and react when problems occur? Are they even aware there are problems? And if experiences are quantified, are they measured in the context of the supporting applications, services, and infrastructure, so that rapid root-cause analysis and remediation are possible? Only enterprises able to deliver extraordinary digital customer experiences will stay relevant and prosper.

Key consideration

Enterprises need confidence that they’re delivering exceptional digital experiences in increasingly complex environments. To achieve this, they require real-time monitoring and 100% visibility across all types of customer-, employee-, and machine-based experiences.

Simplify cloud complexity and accelerate digital transformation on AWS

Out of the box, Dynatrace works with Amazon EC2, Amazon Elastic Container Service, Amazon Elastic Kubernetes Service, AWS Fargate, and serverless solutions like AWS Lambda.

