Synthetic testing simulates real-user behaviors within an application or service to pinpoint potential problems. Here’s a look at why this testing matters, how it works, and what companies need to get the most from this approach.

What is synthetic testing?

Synthetic testing is an IT process that uses software to discover and diagnose performance issues with user journeys by simulating real-user activity. Also called continuous monitoring or synthetic monitoring, synthetic testing mimics actual users’ behaviors to help companies identify and remediate potential availability and performance issues.

For example, teams can program synthetic test tools to send large volumes of simultaneous resource requests to a new application and evaluate how well it responds. Are all requests met in a reasonable amount of time? If not, what was the median increase over baseline response times? Did these requests impact users’ overall application experience? How?
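
The questions above can be turned into numbers with a few lines of analysis. The following is a minimal sketch, assuming response times have already been collected in milliseconds; the function names, the sample timings, and the 250 ms threshold are illustrative assumptions, not part of any particular tool.

```python
from statistics import median


def median_increase(baseline_ms, under_load_ms):
    """Return the absolute (ms) and relative increase in median response time."""
    base = median(baseline_ms)
    load = median(under_load_ms)
    return load - base, (load - base) / base


def share_within(samples_ms, threshold_ms):
    """Return the fraction of requests answered within the threshold."""
    return sum(1 for s in samples_ms if s <= threshold_ms) / len(samples_ms)


# Hypothetical timings: a quiet baseline run and a run under simulated load.
baseline = [120, 130, 125, 140, 135]
under_load = [180, 210, 190, 400, 205]

delta_ms, delta_ratio = median_increase(baseline, under_load)
on_time = share_within(under_load, threshold_ms=250)
print(f"median rose by {delta_ms} ms ({delta_ratio:.0%}); {on_time:.0%} of requests met the threshold")
```

Using the median rather than the mean keeps a single slow outlier, such as the 400 ms request here, from dominating the comparison.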

By understanding how an app or service may respond in real-world conditions, teams can better pinpoint potential problems and fix these issues before live deployment or before users are affected. Along with real user monitoring (RUM), synthetic testing provides a comprehensive view into the user experience to ensure software meets user requirements.

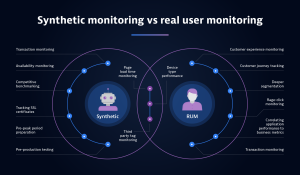

Synthetic testing vs. real user monitoring

Both synthetic testing and real user monitoring (RUM) play a role in application development. The biggest difference between them is that synthetic testing simulates real-world scenarios, whereas RUM measures actual user behavior.

Another difference is where they happen in the development life cycle. Because synthetic tests don’t require live user traffic, teams can conduct them in production or test environments to assess overall performance or the performance of a specific application component. In addition, the simulated nature of these tests keeps resource overhead low, meaning teams can run them continuously.

RUM, meanwhile, requires actual users. As a result, it can only be conducted once applications or services are live. While this makes RUM more limited in scope, it doesn’t negate its value. Synthetic tests attempt to mimic both common and uncommon user behaviors, but even the best simulations are no substitute for the real thing. Users often interact with technologies in unpredictable ways that could reveal previously unknown issues.

Put another way, users may act out of frustration in response to a set of circumstances — such as “rage clicking” if an application doesn’t respond fast enough. Synthetic tools, meanwhile, can only simulate frustration. While teams can program them to rage click after a certain point or even randomly, they can never truly replicate the real-user experience.

As a result, organizations benefit from a mix of RUM and synthetic tests.

Types of synthetic testing

There are three broad types of synthetic testing: availability, web performance, and transaction. Each offers its own strengths and abilities for different needs.

Availability testing

Availability testing helps organizations confirm that a site or application is responding to user requests. It can also check for the availability of specific content, or if specific API calls are successful.
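
As a rough illustration, an availability check can be as small as one HTTP request plus an optional content check. This sketch uses only the Python standard library; the function name `is_available`, its parameters, and the injectable `opener` argument are assumptions made for the example, not the interface of any specific monitoring product.

```python
import urllib.request


def is_available(url, expected_text=None, timeout=5.0, opener=urllib.request.urlopen):
    """Return True if the URL answers with a 2xx status.

    If expected_text is given, the response body must also contain it.
    The opener parameter exists so the check can be exercised without a network.
    """
    try:
        with opener(url, timeout=timeout) as response:
            if not 200 <= response.status < 300:
                return False
            if expected_text is None:
                return True
            body = response.read().decode("utf-8", errors="replace")
            return expected_text in body
    except OSError:
        # URLError, HTTPError (4xx/5xx), and timeouts are all OSError subclasses.
        return False
```

A scheduler could call `is_available("https://example.com/", expected_text="Sign in")` every minute and alert after several consecutive failures; the URL and text here are placeholders.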

Web-performance testing

Web-performance testing evaluates metrics including page loading speed, the performance of specific page elements, and the occurrence rate of site errors.
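
To make these metrics concrete, here is a small sketch that summarizes a batch of page-load samples into a median load time, a 95th-percentile load time, and an error rate. The `PageSample` type, the nearest-rank percentile method, and the sample values are assumptions chosen for the illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class PageSample:
    load_ms: float  # time until the page finished loading
    status: int     # HTTP status code of the page request


def percentile(values, pct):
    """Nearest-rank percentile: the smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(samples):
    """Return median load time, 95th-percentile load time, and error rate."""
    load_times = [s.load_ms for s in samples]
    errors = sum(1 for s in samples if s.status >= 400)
    return {
        "p50_ms": percentile(load_times, 50),
        "p95_ms": percentile(load_times, 95),
        "error_rate": errors / len(samples),
    }


# Hypothetical results from ten synthetic page loads.
loads = [800, 950, 1200, 700, 3100, 900, 1000, 850, 1100, 990]
codes = [200, 200, 200, 200, 500, 200, 200, 404, 200, 200]
summary = summarize([PageSample(ms, code) for ms, code in zip(loads, codes)])
print(summary)
```

Reporting a high percentile alongside the median helps surface the occasional very slow load that an average would hide.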

Transaction testing

In transaction testing, robot clients attempt to complete specific tasks, such as logging into accounts, filling in an on-site form, or completing the checkout process.

How synthetic testing works

Synthetic testing works by using a robotic client application installed on a browser, mobile device, or desktop computer. The client sends a series of automated test calls to a service or application that simulate a user’s “clickstream,” the sequence of actions they take while on the site.

Consider a synthetic test designed to evaluate an e-commerce shopping application. First is a test of the home screen. Does it open quickly and consistently with no visual artifacts? Next, the synthetic test links to product pages. It then moves on to shopping carts, shipping rates, and, finally, a simulated purchase. The robotic client application reports if any of these transactions fail or perform too slowly, allowing companies to make changes before services go live.
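
The e-commerce walkthrough above could be sketched as an ordered list of steps that a small runner executes and times. Everything named here (`run_clickstream`, `StepResult`, the `visit` placeholder, the paths, and the two-second threshold) is hypothetical; a real robotic client would drive a browser or issue requests inside each step.

```python
import time
from dataclasses import dataclass


@dataclass
class StepResult:
    name: str
    ok: bool
    duration_s: float
    slow: bool


def run_clickstream(steps, max_step_s=2.0):
    """Run named steps in order and stop at the first failure,
    since a real user could not continue past a broken page."""
    results = []
    for name, action in steps:
        start = time.perf_counter()
        try:
            action()
            ok = True
        except Exception:
            ok = False
        duration = time.perf_counter() - start
        results.append(StepResult(name, ok, duration, duration > max_step_s))
        if not ok:
            break
    return results


def visit(path):
    """Hypothetical placeholder: return a step that would load the given path."""
    def step():
        pass  # a real step would drive a browser or send requests here
    return step


checkout_journey = [
    ("home", visit("/")),
    ("product", visit("/products/42")),
    ("cart", visit("/cart")),
    ("shipping", visit("/shipping-rates")),
    ("purchase", visit("/checkout")),
]

for result in run_clickstream(checkout_journey):
    status = "FAILED" if not result.ok else "SLOW" if result.slow else "ok"
    print(f"{result.name}: {status} ({result.duration_s:.3f}s)")
```

Stopping at the first failed step is a design choice for this sketch; some teams may prefer to run every step and report all failures at once.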

When designing synthetic tests, it’s worth using a combination of browser-based, mobile, and desktop tests to assess application performance. This is because each of these traffic vectors comes with unique challenges. For example, even if response times are consistent across all testing environments, mobile users could still experience issues with automatic screen size scaling or application design choices that favor desktop users.

Requirements for synthetic testing

To create a reliable testing environment, several components are critical.

Well-defined goals

Effective synthetic testing depends on well-defined goals. Organizations need to identify what they’re trying to measure before they write and deploy test scripts. Doing so ensures the data captured is relevant to these goals.

Customizable robot clients

Robot clients are the foundation of synthetic testing. But in the same way that testing needs to evolve over time, organizations need client components that can be configured to keep pace with change as their on-premises, cloud, or hybrid environments evolve.

Comprehensive synthetic testing tools

To effectively evaluate and optimize digital experiences, organizations need synthetic testing tools that cover the major user touchpoints with their environments. At a minimum, a synthetic monitoring program should include the following tools:

- Single-URL browser monitors. Single-URL browser monitors simulate a user’s visit to a site using an up-to-date web browser. By consistently running single-URL browser testing from both public and private sources, organizations can ensure that sites maintain an established baseline performance.

- Browser clickpaths. Browser clickpath testing monitors specific click sequences through critical application workflows. Running browser clickpaths regularly can ensure that specific function sequences remain available and high-performing.

- HTTP monitors. HTTP monitors use simple HTTP requests to ensure that specific application programming interface (API) endpoints and website resources are available.

In addition to running these tests regularly, organizations should ensure their synthetic testing tools follow basic security best practices. For example, a synthetic testing tool should not send requests to localhost or default IP addresses.
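
One way to enforce a rule like this is to vet each target URL before a monitor is allowed to call it. The sketch below uses the Python standard library; the function name `is_safe_target` is hypothetical, and the choice to block loopback, private, link-local, and unspecified addresses is an assumed interpretation of the guidance rather than a definitive policy.

```python
import ipaddress
from urllib.parse import urlsplit


def is_safe_target(url):
    """Return False for URLs that point at localhost or at non-public IP addresses."""
    host = urlsplit(url).hostname
    if host is None:
        return False
    host = host.lower()
    if host == "localhost" or host.endswith(".localhost"):
        return False
    try:
        address = ipaddress.ip_address(host)
    except ValueError:
        # Not an IP literal, so treat it as a DNS name. A stricter check
        # would also resolve the name and vet the resulting addresses.
        return True
    return not (
        address.is_loopback
        or address.is_private
        or address.is_link_local
        or address.is_unspecified
    )
```

Note that this only inspects the URL as written; it does not protect against a public hostname that resolves to an internal address.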

Synthetic testing best practices

A few best practices can help organizations achieve reliable, actionable data from synthetic testing tools. The following are three strategies worth considering.

1. Reduce setup complexity

The level of application integration that synthetic solutions need to collect key data can make setup complex. To mitigate this risk, clearly define your goals before setting up tests, which streamlines configuration, and use tools designed to simplify the process with visual or script-based setups.

2. Prioritize continual monitoring

Continual monitoring is critical for organizations to understand how applications and users interact over time. It also plays a key role in addressing the issue of test fragility. Even small changes to the user interface, such as removing or replacing a button, can cause tests to fail. Continual monitoring allows organizations to detect these failures soon after they appear and fix them quickly.

3. Keep context front and center

Testing doesn’t drive action without context. While many synthetic tools provide data about what’s happening when users interact with applications, they don’t answer the question of why. Integrating synthetic testing into an end-to-end observability platform keeps context front and center and provides complete visibility without adding more tools.

Synthetic testing as part of a unified observability strategy

Synthetic testing is easier to develop and maintain when it is part of a wider observability strategy. By taking a unified platform approach to observability, organizations can integrate synthetic testing into their overall application performance efforts.

Dynatrace synthetic monitoring provides continuous and on-demand answers to questions about application performance, reliability, and the overall user experience. By combining this approach with Dynatrace RUM, it’s possible to capture the full range of customer behavior, from common clickstreams to unexpected actions that may suddenly tax resources or lead to strange application behavior.

Dynatrace helps organizations proactively evaluate the performance of applications no matter where they are in the development cycle. The result is enhanced application performance and improved user experience that keeps customers coming back.
