In part one, we introduced the concept of intellectual debt, defining it as the gap between what works and our knowledge of why it works. As is often the case with technical debt, some amount of intellectual debt is acceptable, even necessary.

You likely have personal stories of accidental successes, of solutions without theory; here’s one of mine. I was helping a customer with an evasive intermittent session drop problem. Well into the third week of analysis, the network admin changed a security parameter in the firewall—to address an unrelated issue—and you can guess what happened: the session drop problem disappeared. Not understanding why the change worked didn’t stop the customer from applying it—based on this new tribal knowledge—to address the problem at their other sites.

We also made the point that machine learning systems can improve IT efficiency, speeding analysis by narrowing focus. But with autonomous IT operations on the horizon, it’s important to understand the path to intellectual debt and its impact on both efficiency and innovation.

Let’s look at a few simple examples on how intellectual debt might begin to accrue.

Intermittent problems have always been challenging—to capture, to reproduce, to analyze. The nature of intermittent problems also makes it difficult to know whether a remedy has worked. What if an implemented change doesn’t fix the problem? Do you reverse the change? Or leave it and try the next, then the next? Will implementing multiple changes lead to unforeseen problems in the future?

For machine learning systems, intermittent problems are particularly challenging. Without consistency in the data, the system needs to look harder for possible causes. The search for answers must move beyond highly confident correlation and towards statistically less-significant patterns. Like humans, machine learning systems arrive at answers with varying degrees of confidence; unlike humans, they aren’t very good at discarding silly answers triggered by spurious correlation. As the data pool becomes larger, more dynamic, and less homogeneous, these spurious correlations are more likely to occur. At the same time, by relying on opaque machine learning-sourced answers—answers without logical explanations—your teams continue to accrue intellectual debt, reducing their ability to notice these misfires.

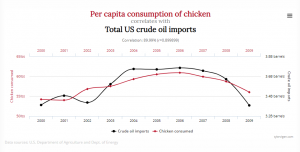

Tyler Vigen has collected a series of spurious (and quite humorous) correlations here. I’ve picked one. Can you guess what a machine learning system might suggest if the goal here were to sell more chicken?

Small-scale projects offer a tempting proving ground for machine learning, with little business risk as you evaluate the system’s capabilities. But these environments rarely have a chance to expose the limitations that lead to intellectual debt. In a pilot project, the system will likely be analyzing a narrow set of technologies, processing data that’s typically static and confined when compared with the dynamism of full production environments. The result? The accuracy of the machine learning system’s answers will be artificially inflated. At the same time, the domain experts conducting the pilot are likely able to invent plausible logic to validate the machine learning’s simple correlations.

But consider these questions:

- The system can’t validate itself. It can’t explain its findings, or how it got there. As you move from pilot to production, how will you validate the system’s answers?

- Will you be satisfied with early successes, losing interest in the underlying logic and theory necessary for validation?

- How will you prepare for the times the system fails to provide an answer to a problem?

These questions expose the cause of the intellectual debt problem—the lack of explanations – and a common path to accruing more debt—complacency.

Imagine that the machine learning system works well enough to change how you focus your IT resources, shifting from in-depth analysis towards efficient remediation. Your team’s skilled analysts get reassigned to more strategic endeavors, along with their collective experience. The remaining team members quickly adapt to the new normal, caring less and less about system interactions and performance theory since analytics are now the realm of the machine learning system.

These are the early markings of accelerating intellectual debt.

Simplistic fault analysis refers to an unnecessarily superficial approach to problem solving. Let’s say the machine learning system suggests—among a handful of potential answers—that you should consider adding an additional server. Easy enough to accomplish in the cloud, you find that the problem goes away. Great! What if the root cause were not CPU resources, but a problem with serial threaded code, or with a database lock, or with inefficient garbage collection? You’ve only masked it by adding another CPU, and soon you may be faced with adding another, and another, and so on. You’re throwing resources—and money—at a problem without addressing the root cause.

Here’s a creative—let’s call it a hypothetical, even far-fetched—scenario. What if, instead of just crude or sloppy machine learning algorithms, there were more sinister forces at play, personified by a rogue data scientist? One could imagine a scheme to artificially promote machine learning suggestions to increase EC2 processing or bandwidth resources, where an anonymous beneficiary might skim pennies from each instance hour. Biasing these algorithms isn’t difficult, although we can believe these will be mostly accidental and not malicious. But without clear accounting of the reasoning behind the answers, these biases or algorithmic errors will become all but impossible to detect.

Finally, the machine learning war room conundrum takes us back in time to the not-so-good old days. While a “perfect” machine learning system promises to eliminate the war room, these “perfect” systems don’t exist; they can’t. Even under the best conditions, there will be cases where confidence in the machine learning system’s list of potential answers will be too low to take action.

Unwilling to risk rolling the dice, the various operations teams seeks greater confidence—the old-school way. In the war room, however, it’s quickly evident that the teams have become rusty, unsure of how the application works, or the meaning of some of the many metric charts at their disposal. Since the system can’t explain its conclusion through cause and effect, teams are left attempting to recreate the logic that led the system to each independent conclusion. The real possibility exists that the war room efforts will result in a completely different set of answers. Unable to prove—or disprove—the system’s conclusions, which route would you take?

The war room is hampered by growing intellectual debt as teams lose tribal knowledge and experience the slow dulling of their analytic skills, in deference to machine learning’s easy answers.

These simple examples suggest ways in which intellectual debt accumulates, and how it can partially counteract the efficiency gains delivered by machine learning. But IT efficiency, while important, isn’t the real story here. Intellectual debt limits our ability to grasp the inherent inaccuracies in machine learning systems, ultimately stifling the innovation that will be required for tomorrow’s autonomous IT operations.

From the tactical to the strategic, intellectual debt—left unchecked—will be costly. Like technical debt, making good decisions early can help you avoid accruing interest. In our final blog in this three-part series, we’ll revisit the contrast between machine learning and artificial intelligence systems that we hinted at in part one.

Further reading

Part 1: Intellectual debt: The hidden costs of machine learning

Part 3: Could intellectual debt derail your journey to the autonomous cloud?

Looking for answers?

Start a new discussion or ask for help in our Q&A forum.

Go to forum