In Java Land you have servlet containers like Tomcat and Jetty to serve your web applications. But with recent non-Java approaches like Node.js and Go providing their own built-in servers, you might conclude that built-in servers are the way to go for Java as well. This post explores why servlet containers are built the way they are and why there’s little reason to use them, at least not in the way they were originally intended.

A bit of history

When Apache Tomcat (then Jakarta Tomcat) was introduced in 1999, the Internet and its tools were far less sophisticated and specialized than they are today. Servlet containers were therefore designed for a very different set of use cases than the ones that matter today.

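Servlet containers were intended to be high-availability components that host several web applications at once and let you deploy and redeploy them without restarting the server. While this may have been a great idea at one point, it introduced a few problems of its own, such as memory leaks and class-loader problems: if anything still holds a reference to a redeployed application’s old class loader, none of that application’s classes can be garbage-collected.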

Where we are today

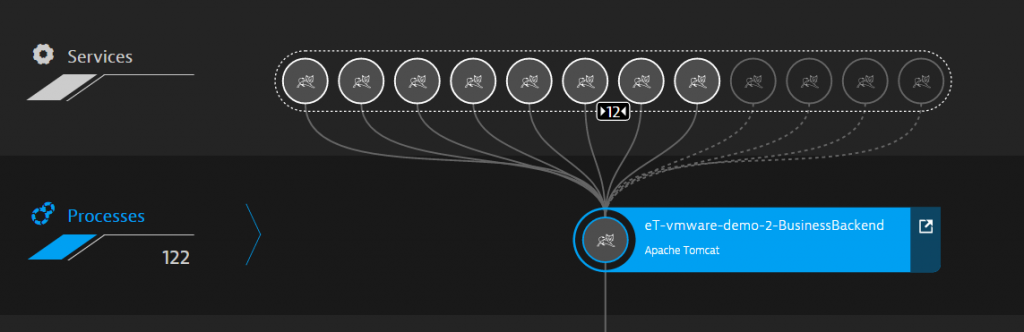

Modern application environments typically consist of multiple services. Whether you’re running a monolithic application or moving toward a microservices-based architecture, I’m sure you’ll agree that availability is an absolute necessity for all web-based content, particularly if you’re running an online business. Availability was already desirable 15 years ago (back then it was common to deploy changes in the middle of the night, when traffic was minimal and downtime mattered less), but we never before faced the performance expectations that customers have today.

The unmatched simplicity of containers

If you’re running a monolithic web application, you may be tempted to stick with standalone instances of Jetty, Tomcat, WildFly, or whatever because they’re easier to use. For example, containers usually offer an integrated web interface for deploying new .war files and for basic monitoring.

However, viewed critically, servlet containers are just additional components that require deployment, configuration, and maintenance. This isn’t necessarily bad, though: containers do serve a purpose, and any tool that serves a purpose deserves to be deployed, configured, and maintained, right? Well, there’s a problem with servlet containers: the bonds between containers and the services running inside them are just too tight.

Deployment

With containers being “just” components, you also need to plan the deployment of the container instances themselves carefully. Containers have release cycles, too: so far in 2015, Tomcat 8 has had roughly eight releases. If this trend continues, there will likely be another three or four releases later this year.

Since all of our maintenance tools have their own release cycles, this alone shouldn’t be a big problem. The real problem is that you can’t deploy a new container version without interrupting the services running inside the container.

This highly-dependent relationship between containers and the services they host leaves us with three possible deployment scenarios:

- Deploy new servlet-container, keep current hosted application version

- Deploy new servlet-container, update to latest hosted application version

- Deploy new application version

Two of these three scenarios usually take the form of multiple build jobs that must be chained or combined accordingly.

With embedded servlet containers, you only have the “deploy new application version” option, because the version of the embedded container is defined in the project’s Maven or Gradle configuration.

Deploying to a servlet container usually depends on the container version. While you can simply copy an executable .jar file to your filesystem and run it, with a container you usually have to follow changes to its deployment API to keep your scripts working. This happened with every major Tomcat release from versions 6 through 8.
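To make this version coupling concrete, here is a minimal sketch of a deploy script written in Java against Tomcat’s Manager application. It assumes the Manager is installed at /manager and that the user has the scripting role; the class and method names are illustrative, not part of any Tomcat API. The one version-specific detail it encodes is real, though: Tomcat 7 moved the Manager’s scripting commands from /manager/ to /manager/text/ (and split the old manager role into manager-gui, manager-script, and others), so a script written for Tomcat 6 breaks after the upgrade.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.nio.file.Path;
import java.util.Base64;

/** Illustrative deploy client; it has to know which Tomcat major version it talks to. */
class ManagerDeployClient {

    /** Builds the Manager "deploy" URI; the path prefix differs between Tomcat 6 and 7+. */
    static URI deployUri(String baseUrl, int tomcatMajorVersion, String contextPath) {
        // Tomcat 6 exposed the scripting commands directly under /manager/,
        // Tomcat 7 and later moved them to /manager/text/.
        String prefix = tomcatMajorVersion <= 6 ? "/manager/" : "/manager/text/";
        String query = "path=" + URLEncoder.encode(contextPath, StandardCharsets.UTF_8);
        return URI.create(baseUrl + prefix + "deploy?" + query);
    }

    /** Uploads a WAR file as the body of an HTTP PUT, as the Manager's deploy command expects. */
    static String deploy(URI uri, Path war, String user, String password)
            throws IOException, InterruptedException {
        String credentials = Base64.getEncoder()
                .encodeToString((user + ":" + password).getBytes(StandardCharsets.UTF_8));
        HttpRequest request = HttpRequest.newBuilder(uri)
                .header("Authorization", "Basic " + credentials)
                .PUT(HttpRequest.BodyPublishers.ofFile(war))
                .build();
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        // The Manager replies with a plain-text line starting with "OK" or "FAIL".
        return response.body();
    }
}
```

Calling `deployUri("http://localhost:8080", 8, "/app")` yields `http://localhost:8080/manager/text/deploy?path=%2Fapp`. With an embedded container there is no equivalent of this version branch: deploying means starting the new .jar.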

Standalone container advocates tell us that the more applications you run in a container, the less overhead you have. When a container update is required, you’re done once you update the container, as opposed to having to update all the instances running embedded containers. While this is true, it’s not the full story:

When an application wreaks havoc inside a container, it can easily affect everything else running in that container. You don’t want a single failing service to drag down other services that aren’t even directly related to it; when problems occur, this leads to completely unpredictable behavior in your environment.
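One way this happens is through shared resources. The sketch below simulates two unrelated applications that share a single request thread pool, roughly the way web applications served through the same container connector share its worker threads. The class name, pool size, and 200 ms timeout are assumptions chosen for illustration: once application A’s requests hang, application B’s perfectly healthy request just sits in the queue.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

/** Hypothetical simulation of two apps sharing one container-wide request thread pool. */
class SharedPoolStarvation {

    /** Returns true if app B's request could NOT be served while app A was stuck. */
    static boolean appBStarved(int poolSize) {
        ExecutorService sharedPool = Executors.newFixedThreadPool(poolSize);
        CountDownLatch appAHang = new CountDownLatch(1); // stands in for a hung downstream call
        try {
            // App A misbehaves: each of its requests blocks and occupies a pool thread.
            for (int i = 0; i < poolSize; i++) {
                sharedPool.submit(() -> {
                    appAHang.await();
                    return null;
                });
            }
            // App B is healthy, but its request needs a free thread from the same pool.
            Future<String> appBResponse = sharedPool.submit(() -> "hello from app B");
            try {
                appBResponse.get(200, TimeUnit.MILLISECONDS);
                return false; // served in time
            } catch (TimeoutException e) {
                return true; // still queued behind app A's stuck requests
            }
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            appAHang.countDown(); // release app A so the pool can drain
            sharedPool.shutdown();
        }
    }
}
```

Here `appBStarved(4)` returns `true`. If app B had its own pool, as it would with its own embedded container, its request would not have to wait for app A at all.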

Besides the operational aspects of deploying and maintaining standalone containers, I’d like to focus on some numbers now:

Memory

When you use your own hardware, memory is relatively cheap these days. In the cloud, however, pricing is typically tied to CPU and memory usage, so memory can get expensive.

If you’re still running a JVM older than 6u23, which was released on Dec 7 2010 (I’m not kidding, people do this), please be aware that 64-bit JVMs need more memory: on average 30-50% more, because every object reference takes 8 bytes instead of 4. Keep in mind that higher Java memory usage leads to longer garbage collection times, so this isn’t just a matter of memory usage but also a matter of performance.

With newer versions you’re probably safe, because the compressed oops feature resolves this. As the Java SE documentation puts it:

Compressed oops is supported and enabled by default in Java SE 6u23 and later. In Java SE 7, use of compressed oops is the default for 64-bit JVM processes when -Xmx isn’t specified and for values of -Xmx less than 32 gigabytes.
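If you’re unsure what a given JVM actually does, you can ask it at runtime. This sketch reads the effective UseCompressedOops flag through HotSpotDiagnosticMXBean; it assumes a HotSpot-based JVM, since the com.sun.management interface is JDK-specific and other JVMs may not expose the flag. The 32 GB limit in the quote follows from the encoding: a 32-bit compressed reference counts 8-byte-aligned slots, and 2^32 × 8 bytes = 32 GiB.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.lang.management.ManagementFactory;

/** Illustrative helper that inspects the running JVM's compressed-oops setting. */
class CompressedOopsCheck {

    /** Returns the current value of a HotSpot VM option as a string. */
    static String vmOption(String name) {
        HotSpotDiagnosticMXBean hotspot =
                ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        // getVMOption throws IllegalArgumentException if this JVM doesn't know the flag.
        return hotspot.getVMOption(name).getValue();
    }

    static boolean compressedOopsEnabled() {
        return Boolean.parseBoolean(vmOption("UseCompressedOops"));
    }

    public static void main(String[] args) {
        // Compressed oops is only possible while the maximum heap stays below roughly 32 GiB.
        long maxHeapMiB = Runtime.getRuntime().maxMemory() / (1024 * 1024);
        System.out.println("Max heap:        " + maxHeapMiB + " MiB");
        System.out.println("Compressed oops: " + compressedOopsEnabled());
    }
}
```

Running it with an explicit -Xmx above 32g should show the flag switching to false on a 64-bit HotSpot JVM.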

In fact, for best performance and stability in current generation projects, we recommend using 64-bit JVMs over 32-bit ones.

Configuration

Besides configuring applications and services, there’s still the configuration required for the container itself. Ports and logging levels are probably among the most prominent of these settings. While there are libraries that make such configuration as unified and seamless as possible, configuring an embedded container is still the easiest way to go, because its settings live right next to the application’s own configuration.
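With an embedded container, port and log level can be read in the same place as the rest of the application’s settings. Here is a minimal sketch, assuming the settings come from environment variables; APP_PORT and APP_LOG_LEVEL are hypothetical names, and the defaults are arbitrary.

```java
import java.util.Map;
import java.util.logging.Handler;
import java.util.logging.Level;
import java.util.logging.Logger;

/** Hypothetical settings holder for an application that embeds its own server. */
final class ServerSettings {
    final int port;
    final Level logLevel;

    private ServerSettings(int port, Level logLevel) {
        this.port = port;
        this.logLevel = logLevel;
    }

    /** Reads the (made-up) APP_PORT and APP_LOG_LEVEL variables, falling back to defaults. */
    static ServerSettings fromEnvironment(Map<String, String> env) {
        int port = Integer.parseInt(env.getOrDefault("APP_PORT", "8080"));
        if (port < 0 || port > 65535) {
            throw new IllegalArgumentException("Invalid port: " + port);
        }
        Level level = Level.parse(env.getOrDefault("APP_LOG_LEVEL", "INFO"));
        return new ServerSettings(port, level);
    }

    /** Applies the log level to the root logger and its handlers (handlers filter separately). */
    void applyLogLevel() {
        Logger root = Logger.getLogger("");
        root.setLevel(logLevel);
        for (Handler handler : root.getHandlers()) {
            handler.setLevel(logLevel);
        }
    }

    public static void main(String[] args) {
        ServerSettings settings = fromEnvironment(System.getenv());
        settings.applyLogLevel();
        System.out.println("Would start the embedded server on port " + settings.port);
    }
}
```

The same values then go straight into whatever embedded server the application starts; there is no separate container configuration file to keep in sync.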

Thinking outside the box

Do yourself a favor and Google the phrase “embedded Java web server”. Especially when serving APIs or minor configuration UIs, chances are you can run a lightweight alternative to a full-blown Tomcat, Jetty, WildFly, or whatever setup.
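One such alternative ships with the JDK itself: the com.sun.net.httpserver package, included since Java 6. The sketch below shows that approach, assuming all you need is a small endpoint; the /health path and the class name are made up for illustration, and this server suits simple APIs rather than replacing a full servlet stack.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;

/** Illustrative API server built only on the JDK's bundled HTTP server. */
class TinyApiServer {

    /** Starts a server on the given port (0 = any free port) with a single /health endpoint. */
    static HttpServer start(int port) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress(port), 0);
        server.createContext("/health", exchange -> {
            byte[] body = "OK".getBytes(StandardCharsets.UTF_8);
            exchange.getResponseHeaders().set("Content-Type", "text/plain; charset=utf-8");
            exchange.sendResponseHeaders(200, body.length);
            // Closing the response body stream completes the exchange.
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        server.start(); // with no executor set, requests run on a default background thread
        return server;
    }

    public static void main(String[] args) throws IOException {
        HttpServer server = start(8080);
        System.out.println("Listening on http://localhost:" + server.getAddress().getPort() + "/health");
    }
}
```

Passing 0 as the port lets the operating system pick a free port, which is handy in tests; `server.getAddress().getPort()` tells you which one was chosen.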

Summary

When deciding whether to use embedded containers, the evergreen “it depends” answer applies. That said, I’m convinced that the cases where it really does depend lie at the fringes of today’s web performance, scalability, and availability standards, and aren’t very important in practice.

Simpler deployment setup, more efficient memory usage, better isolation between independent services, and easier configuration are just a few of the arguments in favor of embedded containers.

Let’s face it, standalone containers were designed to solve problems that we don’t really face today. And frameworks like Spring Boot make it very easy to get started with standalone applications.

Try Dynatrace for free!

Dynatrace offers a great way of visualizing your environment, regardless of whether you’re using standalone servlet containers or not. If you’re interested in what your environment really looks like, take our free trial. It allows you to fully use Dynatrace for 15 days, without commitment.
