I consistently stand in front of customers who want the same thing from their performance monitoring toolsets. “We want to be more proactive,” they proclaim in desperation. “We need a tool that will automatically alert us to a problem,” they demand. “We want to address a problem before it becomes a problem.” This is a constant challenge for Operations. Think about it. How many times have you said, “I will change the oil in my car when the motor locks up and smoke is pouring out of the dash”? Yet this is how companies treat the revenue-generating applications they depend on every day. Pushing features and functionality through the application delivery chain often means performance problems are missed until they bring down applications in production.

In this blog post, I share the struggle I’ve seen at companies that buy a performance management product in the belief that it will make them more proactive. In fact, the transition from reactive to proactive requires much more than tools.

The Status Quo: Faster at Being Reactive, but Not Yet Proactive

The companies I deal with face the same performance management problems and questions described in the introduction. Let’s look at the typical process for pushing a new release into production. Each team has its own way of reacting as fast as possible when something goes wrong:

- The Operations Team may use as many as fifteen different tools to watch every aspect of the application. As soon as something looks amiss, everyone springs into action to identify the problem. Once the issue is isolated, the deployment change is backed out. A feedback loop starts as teams try to determine the root cause.

- The Development Team gets data from Ops but soon demands more, because standard log output is not deep enough and developers are typically not allowed direct access to the production machines for debugging. Additional tracing code is hastily written into the application to get more information into the logs. These logs are combed over to understand where the problem is really coming from. Once the root cause is isolated, new code is written to address it.

- The Testing Team hastily uses load generators to check whether the problem is really solved. That only works if the problem can be recreated in a controlled environment. When a level of confidence is reached, the application is deployed and the cycle begins anew.

This process is, at its core, the definition of being reactive. Even with the best tools and additional resources, a team can only get faster at being reactive; that does not help it proactively prevent problems.

What does proactive mean to Ops, Test, and Dev?

Proactive means different things depending on which team is asked:

- Operations teams feel that getting proactive means having better, “smarter” alerts: baselines that quickly adjust to new norms, so the team does not have to fly blind when new builds are introduced. The problem is that this does not make a team more proactive at all. It just makes that team quicker at being reactive.

- Testing teams would like to catch issues earlier but the tools used make it hard to handle the changes coming from the development teams. They ask for a solution to help them manage things from release to release while adapting to new code coming through with very little setup time.

- Development teams are trying to be more agile in their process, moving to a more task-oriented process with more automation in the testing and building of these applications. Visibility into the performance of each build is not the main priority.

Lack of communication and collaboration prevents being proactive

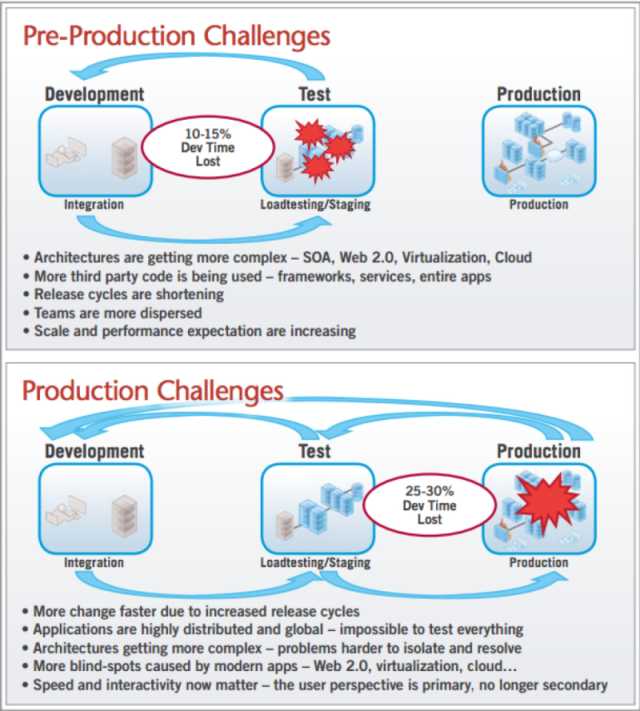

To address the need to be more proactive we have to address the way IT organizations look at managing the performance of applications. Current processes make this hard for one main reason: The lack of communication and collaboration during the lifecycle makes identifying and addressing issues very hard.

Each team has very different drivers and goals, which only compounds this challenge. Let’s think about the scenario above. The tool sets used by these teams usually come from different vendors. These tools provide different types of information that are poorly shared with the other teams:

- Operations Teams have an arsenal of tools, ranging from infrastructure-level tools to homegrown solutions, in an effort to tease out the most obscure data. All are used in the hope that performance problems can be identified.

- Testing teams have another set that allows for some level of performance analysis, but typically only response time analysis of an application under load. With more Web 2.0 technology in use, these tools are having a harder time delivering information about edge complexities like third-party APIs and toolkits.

- Development Teams, while they understand performance, are primarily testing for functionality and data integrity. Their tools are mostly used to automate the build process and to run functional and regression tests. Unit tests are written to verify that the right response was returned, not how long it took for that response to return.

Each tool provides performance data, but none of them allows that data to be shared between teams. This leads to problems when communicating and collaborating on performance topics across the lifecycle.
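
To make the point about unit tests concrete, here is a minimal sketch of a test that checks both what was returned and how long it took. Everything in it is an assumption for illustration: `lookup_price` is a hypothetical stand-in for real application code, and the 50 ms budget is an arbitrary number that, in practice, would come from what Operations observes in production.

```python
import time
import unittest


def lookup_price(sku):
    """Hypothetical function under test; stands in for real application code."""
    prices = {"A100": 19.99, "B200": 5.49}
    return prices[sku]


class LookupPriceTest(unittest.TestCase):
    # Assumed budget, for illustration only.
    BUDGET_SECONDS = 0.05

    def test_correct_and_fast_enough(self):
        start = time.perf_counter()
        result = lookup_price("A100")
        elapsed = time.perf_counter() - start

        # The functional check most unit tests already make.
        self.assertEqual(result, 19.99)
        # The extra check: how long the response took to return.
        self.assertLess(elapsed, self.BUDGET_SECONDS)
```

A single timing assertion like this is noisy on shared build machines, so it is better treated as an early warning than as a replacement for load testing.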

Becoming Proactive requires Process Changes

The Definition of Proactive: creating or controlling a situation by causing something to happen rather than responding to it after it has happened.

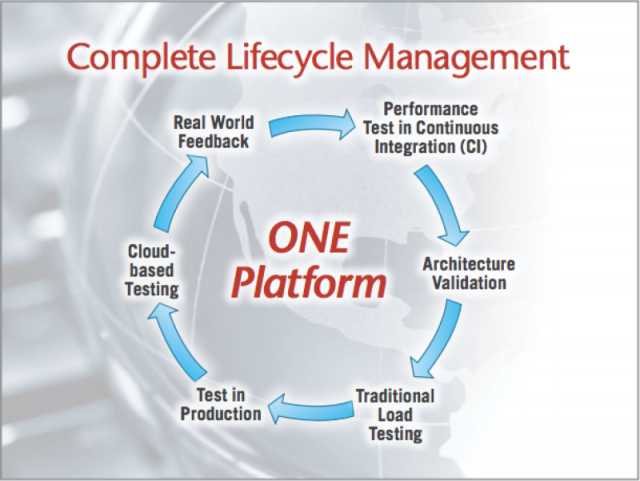

By this definition, smarter alerts do not make a team proactive; they only make it faster at reacting. The only way to become proactive is to address the problem at its source: the Application Delivery Chain. To truly become proactive with your applications you must be in control of them. Teams must come together to cause the application to do what they expect. A change in process is imperative to move from reactive teams to a proactive ecosystem. The phases of the application cannot be treated as independent, isolated steps in moving code from concept to a working product that generates revenue. Each phase must be looked at as part of a whole, with each team depending on the others and working together to bring a system from design to production readiness in a timely manner. Take the previously discussed scenario. Had the teams been using a unified platform for performance, the outcome would have looked very different.

So how does an organization begin to address the delivery chain and start to become proactive? IT must make performance a key metric for delivering quality products. To do this, an IT organization needs a process and a platform that provide three things: communication, a rich understanding of performance details, and a single source of truth. Together these deliver actionable data to the appropriate teams and empower them to make decisions with that data. A single platform makes performance data available to all the teams involved in moving applications from the drawing board to the end user. Operations now feeds data back to the development teams for rapid feedback. Once Development addresses performance issues, thorough testing can occur. Testing teams can now use real test cases extracted from user transactions to test the performance fixes. QA is now empowered to give the final approval for any release going into production. Now, instead of looking for the smallest hiccup, Operations expects normal performance. The result is a level of repeatability and predictability.
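
As a rough sketch of what a shared source of truth could enable, the check below compares response times from a candidate build against timings recorded in production and withholds approval when a transaction has slowed down too much. The function name, the data layout, the sample numbers, and the 20% tolerance are all assumptions made for illustration; they do not describe any particular product.

```python
from statistics import median


def find_regressions(baseline, candidate, tolerance=0.20):
    """Return transactions whose median response time grew by more than tolerance.

    baseline and candidate map a transaction name to a list of
    response times in milliseconds (a hypothetical layout).
    """
    regressions = {}
    for name, base_times in baseline.items():
        new_times = candidate.get(name)
        if not new_times:
            continue  # transaction was not exercised in this build
        base_ms = median(base_times)
        new_ms = median(new_times)
        if new_ms > base_ms * (1 + tolerance):
            regressions[name] = (base_ms, new_ms)
    return regressions


# Made-up sample data: production timings from Operations,
# test timings from the candidate build.
production = {"login": [120, 130, 125], "checkout": [300, 310, 290]}
candidate_build = {"login": [122, 128, 131], "checkout": [450, 470, 440]}

regressions = find_regressions(production, candidate_build)
release_approved = not regressions
```

With this sample data, `checkout` is flagged and the release is not approved. The design point is that Operations, Testing, and Development all read the same numbers instead of each team consulting its own tool.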

Proactive comes when the decision is made to attack problems at the root, before they are in the wild causing consternation for your teams and your customers. This sounds hard to do and, in fact, it can be if not everyone has bought into the overall vision and goal. Each team needs to understand that it is not an independent group. It is now part of the overall ecosystem, and if one part fails the whole ecosystem fails. Over the next few months we will explore what this process and platform should look like at each phase. Ultimately we will look at the need for a Center of Excellence around performance and at a template that can be used to start replicating this process in an IT organization.
