Dynatrace® named a Leader in 2025 Gartner® Magic Quadrant™ for Digital Experience Monitoring

For the second year running, Dynatrace is named a Leader, and in 2025, positioned furthest for Completeness of Vision.

What is customer experience and why does it matter?

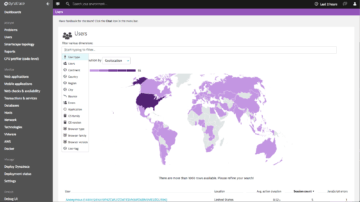

Customer experience is the impression that your customers and end users have of your brand, including all user interactions with web pages, mobile and web applications, and other native/hybrid mobile apps. A positive customer and end user experience is a substantial way to drive revenue and gain customer loyalty. With Dynatrace you have the ability to see all your applications the way your customers do. This insight is a key ingredient to staying competitive in today's market.

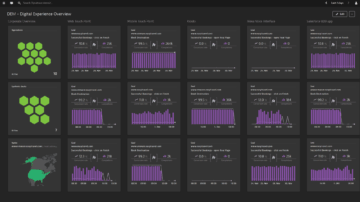

Understand how your applications’ performance impacts your bottom line

Organizations know that the key to driving revenue is to evaluate applications from a strategic vantage point and ensure the digital user experience is positive.

Dynatrace gives you the ability to see applications the way your customers and end users do, which gives teams full operational insight. This insight is the key ingredient to staying competitive in today's market.

- Awareness into where performance matters most

- Know each customer's journey and interactions with your application

- Prioritize performance optimization based on real digital business outcomes

- Better collaboration with IT around a common language based on digital experience

We are in the middle of a digital revolution

MIT Technology Review and Dynatrace teamed up to examine how Digital Performance Management allows business and IT teams to measure customer experience.

- This exclusive report describes how to close the customer-experience gap through Digital Performance Management (DPM). The report offers benefits and insights on getting started with DPM today.

- This infographic highlights how DPM integrates IT system performance with digital business performance so all stakeholders can speak the same language and work towards the same goals.

- This introduction to DPM explains how this emerging approach can provide serious business value.

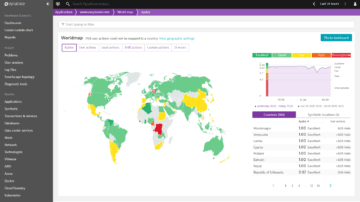

Proactively monitor end user experience

Don't get caught off guard! Dynatrace analyzes each customer's digital journey in real time to evaluate satisfaction.

- Use Dynatrace synthetic monitoring to simulate web visits and mobile app usage, and resolve problems before they affect your customers

- Deep dive into each action and isolate performance issues instantly

- Complete view across all your web pages and native/hybrid mobile apps

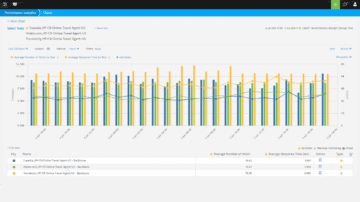

Fast complaint resolution is key to customer satisfaction

With the rise of social media, users hold all the power. A disappointing user experience can be detrimental to your brand.

- End users don't want websites and web applications that are slow or, worse, crash

- Delays can result in almost 50% abandonment

- Research shows fast and fluid online interactions translate into positive and engaging experiences

- A positive end user experience protects your brand and preserves customer loyalty

Capturing real user experience data is vital for companies doing business online. Dynatrace recognizes the challenges of the multi-channel customer experience and the need for a user-centric approach for modern web and mobile applications.

- Automatic detection of, and alerts on, slow response times and outages

- Fix emerging problems before users are negatively impacted
