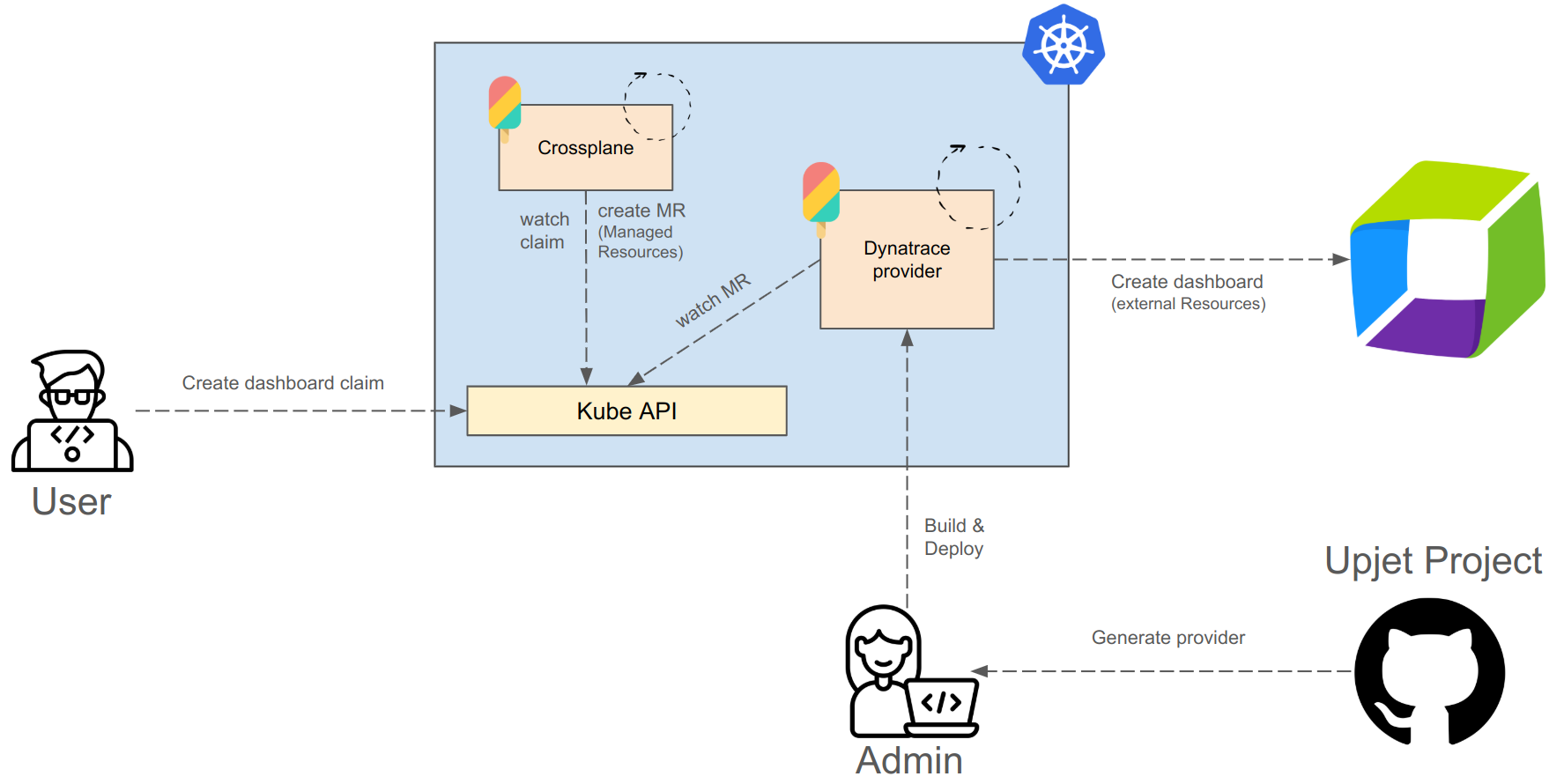

In today’s rapidly evolving cloud-native landscape, managing observability efficiently can be a game-changer for any platform. During our recent tech talk at #KCDAustria, Observability as Code – DIY with Crossplane, we demonstrated how the Upjet project can streamline the creation of a custom Crossplane Dynatrace provider, unlocking robust observability capabilities in Kubernetes environments. This blog post covers what we did and how we did it.

In this blog post, we will deploy a monitoring dashboard with alerts and notifications as one simple Kubernetes resource. To help us achieve our goal, we have to set up a Kubernetes cluster and infrastructure components. But before that, we need to discuss some important concepts and patterns.

Configuration as Code

Managing vast amounts of configuration for organizational setups at scale is a hard problem: the configurations span many tools and providers and have many different contributors.

However, there is a pattern for remedying many of these problems: treating these configurations as declarative code instead of applying changes manually.

Originally dubbed Infrastructure as Code, the pattern can be generalized and used for anything that provides a proper interface—simply put, Configuration as Code.

While many tools and their respective approaches exist to write and apply such configuration, one of the most interesting recent developments is extending Kubernetes and using its readily available REST API and reconciliation loops.

The operator pattern in Kubernetes

The mechanism that Kubernetes provides for interface extension is called the operator pattern. This is a powerful mechanism for automating the management of complex applications. It extends the Kubernetes API via custom resource definitions (CRDs), enabling new object types to be created. These are constantly watched by custom controllers, so-called operators.

Operators are designed with a “reconciliation loop,” meaning they continuously compare a resource’s real state against its desired state. When a resource deviates, the operator brings it back into alignment. This is the essence of Kubernetes automation and declarative infrastructure.
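
The reconciliation idea can be sketched in a few lines of Go. This is only an illustration under simplifying assumptions: the `State` type and the `reconcile` function are hypothetical, and a real operator would observe and change an external system instead of copying a value.

```go
package main

import "fmt"

// State is a hypothetical, simplified view of a resource: just a replica count.
type State struct {
	Replicas int
}

// reconcile compares the observed state with the desired state and returns
// the state after one corrective step, plus whether anything had to change.
func reconcile(desired, observed State) (State, bool) {
	if observed == desired {
		return observed, false // already converged, nothing to do
	}
	// A real operator would call an external API here; the sketch simply
	// adopts the desired value.
	return desired, true
}

func main() {
	desired := State{Replicas: 3}
	observed := State{Replicas: 1} // drifted from the desired state

	// Operators run this check repeatedly; two passes are enough to show
	// one correction followed by a no-op.
	for i := 1; i <= 2; i++ {
		var changed bool
		observed, changed = reconcile(desired, observed)
		fmt.Printf("pass %d: replicas=%d changed=%t\n", i, observed.Replicas, changed)
	}
}
```

The second pass changes nothing, which is the property that makes it safe to run the loop continuously.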

Crossplane and the operator pattern

Crossplane builds on the operator pattern and extends Kubernetes beyond managing containerized workloads. Through the Kubernetes API, it enables you to define and provision—among other things—cloud infrastructure resources such as databases, compute instances, and networking components.

Crossplane providers implement the operator pattern for external systems (for example, AWS, GCP, Azure). When you install a provider, it registers the external resources it manages as Kubernetes-native custom resource definitions (CRDs). The provider’s controller watches objects created from these CRDs and reconciles the external system so that it matches the desired state they declare.

Compositions

In Kubernetes, low-level resources are managed by high-level resources (for example, a Deployment manages ReplicaSets, which in turn manage Pods). Crossplane also allows you to build high-level resources using the Composition pattern.

An example would be an Application resource that abstracts away details like database, network, and compute needs. A Crossplane composition enables you to build something like this, giving you control over the new interface (also a Kubernetes CRD) and the implementation (which low-level resources are created and how they are created).
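
To make the split between interface and implementation concrete, here is a small Go sketch. It is an analogy only: real Crossplane compositions are declared as Kubernetes resources, not as Go functions, and the `Application`, `Resource`, and `compose` names are hypothetical.

```go
package main

import "fmt"

// Application is a hypothetical high-level resource: the interface a
// platform team would expose to its users.
type Application struct {
	Name         string
	NeedsStorage bool
}

// Resource is a hypothetical low-level resource created on the user's behalf.
type Resource struct {
	Kind string
	Name string
}

// compose plays the role of a composition: it decides which low-level
// resources back a given Application and how they are named.
func compose(app Application) []Resource {
	resources := []Resource{
		{Kind: "Compute", Name: app.Name + "-compute"},
		{Kind: "Network", Name: app.Name + "-network"},
	}
	if app.NeedsStorage {
		resources = append(resources, Resource{Kind: "Database", Name: app.Name + "-db"})
	}
	return resources
}

func main() {
	for _, r := range compose(Application{Name: "shop", NeedsStorage: true}) {
		fmt.Printf("%s/%s\n", r.Kind, r.Name)
	}
}
```

The user only ever sees `Application`; which resources are created, and how, stays under the control of whoever maintains `compose`.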

Using the Upjet project

Now that we have an overview of the concepts, let’s look at how we implement our demo. When building a custom Crossplane provider, we can take two approaches: build the provider from scratch or leverage an existing tool. We opted for the latter, by using the Upjet project, which automates the creation of Crossplane providers based on existing Terraform providers. Here’s why:

- Speed and simplicity: Writing a provider from scratch requires a deep understanding of the external system API and how Crossplane manages resources. Upjet allows us to generate a provider much faster by transforming Terraform provider schemas into Crossplane CRDs, significantly reducing the development time.

- Reuse of Terraform providers: Upjet allows us to tap into the vast ecosystem of Terraform providers. Since there are already many well-established Terraform providers for various cloud platforms and services, using Upjet means we don’t have to reinvent the wheel.

Generating a new Crossplane provider with Upjet

Creating a new Crossplane provider using Upjet is a streamlined process that allows you to extend Crossplane’s capabilities with minimal setup. Follow the official Upjet documentation to get started.

In our demonstration at the KCD Austria tech talk, we showcased how to build a Dynatrace provider. Below are the detailed steps we followed.

Step 1: Adjust the Makefile

The Makefile needs to reference the official Dynatrace Terraform provider. This adjustment allows Upjet to use the correct source when generating the Crossplane provider.

Here’s an example configuration:

    export TERRAFORM_PROVIDER_SOURCE ?= dynatrace-oss/dynatrace
    export TERRAFORM_PROVIDER_REPO ?= https://github.com/dynatrace-oss/terraform-provider-dynatrace
    export TERRAFORM_PROVIDER_VERSION ?= 1.66.0
    export TERRAFORM_PROVIDER_DOWNLOAD_NAME ?= terraform-provider-dynatrace
    export TERRAFORM_PROVIDER_DOWNLOAD_URL_PREFIX ?= https://releases.hashicorp.com/$(TERRAFORM_PROVIDER_DOWNLOAD_NAME)/$(TERRAFORM_PROVIDER_VERSION)
    export TERRAFORM_NATIVE_PROVIDER_BINARY ?= terraform-provider-dynatrace_v1.66.0
    export TERRAFORM_DOCS_PATH ?= docs/resources

These settings specify the source, version, and download paths for the Dynatrace Terraform provider that Upjet will wrap as a Crossplane provider.

Step 2: Configure Provider Resources

Set Up the Provider Config

To configure the connection details, we need to modify internal/clients/dynatrace.go to reference the secret structure expected for the provider. In this case, define tenantURL and apiToken for Dynatrace connectivity:

    const (
        tenantURL = "dt_env_url"
        apiToken  = "dt_api_token"
    )

Then, reference these credentials in the TerraformSetupBuilder:

    // TerraformSetupBuilder builds a Terraform setup function, returning provider configuration.
    func TerraformSetupBuilder(version, providerSource, providerVersion string) terraform.SetupFn {
        return func(ctx context.Context, client client.Client, mg resource.Managed) (terraform.Setup, error) {
            ...

            // Set credentials in the provider configuration.
            ps.Configuration = map[string]any{}
            if v, ok := creds[tenantURL]; ok {
                ps.Configuration[tenantURL] = v
            }
            if v, ok := creds[apiToken]; ok {
                ps.Configuration[apiToken] = v
            }
            return ps, nil
        }
    }
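
The elided part of the function is not shown in this post. As a rough, standalone sketch of the credential handling, assuming the secret payload is a flat JSON object, the parsing and key filtering could look like the following. The `buildConfiguration` helper is hypothetical and not part of Upjet or the generated provider.

```go
package main

import (
	"encoding/json"
	"fmt"
)

const (
	tenantURL = "dt_env_url"
	apiToken  = "dt_api_token"
)

// buildConfiguration parses the JSON payload stored under the secret's
// "credentials" key and copies only the known keys into the provider
// configuration map. Unknown keys are ignored.
func buildConfiguration(secretData []byte) (map[string]any, error) {
	creds := map[string]string{}
	if err := json.Unmarshal(secretData, &creds); err != nil {
		return nil, fmt.Errorf("cannot unmarshal credentials as JSON: %w", err)
	}
	cfg := map[string]any{}
	for _, key := range []string{tenantURL, apiToken} {
		if v, ok := creds[key]; ok {
			cfg[key] = v
		}
	}
	return cfg, nil
}

func main() {
	secret := []byte(`{"dt_env_url": "https://my-tenant.com", "dt_api_token": "my-secret-token"}`)
	cfg, err := buildConfiguration(secret)
	if err != nil {
		panic(err)
	}
	fmt.Println(len(cfg), cfg[tenantURL])
}
```

The key names must match what the Terraform provider expects in its configuration block, which is why the constants double as both secret keys and configuration keys.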

Define External Name Configurations

To identify external names for resources, update config/external_name.go by adding mappings for the Dynatrace resources:

    // ExternalNameConfigs contains all external name configurations for this provider.
    var ExternalNameConfigs = map[string]config.ExternalName{
        "dynatrace_alerting":           config.IdentifierFromProvider,
        "dynatrace_email_notification": config.IdentifierFromProvider,
        "dynatrace_json_dashboard":     config.IdentifierFromProvider,
        "dynatrace_metric_events":      config.IdentifierFromProvider,
    }

This setup ensures that each resource is correctly identified using the provider’s unique identifier.

Add Custom Configurations for Resources

For each resource, create a corresponding config subfolder and add a config.go file with a Configure function. This function customizes the resource’s configuration and short group name as needed:

config/alerting/config.go

    package alerting

    import "github.com/crossplane/upjet/pkg/config"

    // Configure customizes individual resources.
    func Configure(p *config.Provider) {
        p.AddResourceConfigurator("dynatrace_alerting", func(r *config.Resource) {
            r.ShortGroup = "alerting"
        })
    }

Repeat this for other resources, such as Dashboard, Event, and Notification.

config/dashboard/config.go

    package dashboard

    import "github.com/crossplane/upjet/pkg/config"

    // Configure customizes individual resources.
    func Configure(p *config.Provider) {
        p.AddResourceConfigurator("dynatrace_json_dashboard", func(r *config.Resource) {
            r.ShortGroup = "dashboard"
        })
    }

config/event/config.go

    package event

    import "github.com/crossplane/upjet/pkg/config"

    // Configure customizes individual resources.
    func Configure(p *config.Provider) {
        p.AddResourceConfigurator("dynatrace_metric_events", func(r *config.Resource) {
            r.ShortGroup = "event"
        })
    }

config/notification/config.go

    package notification

    import "github.com/crossplane/upjet/pkg/config"

    // Configure customizes individual resources.
    func Configure(p *config.Provider) {
        p.AddResourceConfigurator("dynatrace_email_notification", func(r *config.Resource) {
            r.ShortGroup = "notification"
        })
    }

Register Custom Configurations

To ensure these custom configurations are applied, register each Configure function in config/provider.go:

    import (
        "github.com/xoanmi/provider-dynatrace/config/alerting"
        "github.com/xoanmi/provider-dynatrace/config/event"
        "github.com/xoanmi/provider-dynatrace/config/dashboard"
        "github.com/xoanmi/provider-dynatrace/config/notification"
    )

    for _, configure := range []func(provider *ujconfig.Provider){
        alerting.Configure,
        event.Configure,
        notification.Configure,
        dashboard.Configure,
    } {
        configure(pc)
    }

This setup allows Upjet to apply each resource’s configuration during the provider generation process, customizing each resource group as specified.
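
The registration pattern itself is independent of Upjet and can be tried in isolation. The sketch below uses hypothetical stand-in types (`Provider`, `Resource`, and an `Apply` method) that only mimic the shape of the calls shown above; it is not how Upjet implements them internally.

```go
package main

import "fmt"

// Resource and Provider are hypothetical stand-ins that only carry the
// fields needed for this illustration.
type Resource struct {
	Name       string
	ShortGroup string
}

type Provider struct {
	configurators map[string][]func(*Resource)
}

// AddResourceConfigurator remembers a customization for one Terraform resource name.
func (p *Provider) AddResourceConfigurator(name string, fn func(*Resource)) {
	if p.configurators == nil {
		p.configurators = map[string][]func(*Resource){}
	}
	p.configurators[name] = append(p.configurators[name], fn)
}

// Apply runs every customization registered for the given resource.
func (p *Provider) Apply(r *Resource) {
	for _, fn := range p.configurators[r.Name] {
		fn(r)
	}
}

func configureAlerting(p *Provider) {
	p.AddResourceConfigurator("dynatrace_alerting", func(r *Resource) { r.ShortGroup = "alerting" })
}

func configureDashboard(p *Provider) {
	p.AddResourceConfigurator("dynatrace_json_dashboard", func(r *Resource) { r.ShortGroup = "dashboard" })
}

func main() {
	p := &Provider{}
	// Same shape as the loop in config/provider.go: each package contributes one function.
	for _, configure := range []func(*Provider){configureAlerting, configureDashboard} {
		configure(p)
	}
	r := &Resource{Name: "dynatrace_json_dashboard"}
	p.Apply(r)
	fmt.Println(r.Name, "->", r.ShortGroup)
}
```

Keeping one `Configure` function per resource package means adding a resource later only touches its own folder plus one line in the registration list.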

Step 3: Generate the Code

Once all the necessary configurations are in place, you’re ready to generate the provider code by running the following command:

    make generate

The make generate command will use the settings specified in the previous steps to:

- Generate the Crossplane provider code based on the Terraform provider configurations.

- Create the necessary Kubernetes Custom Resource Definitions (CRDs) for each resource, allowing Crossplane to manage them.

Running make generate will produce output similar to the following, showing the installation of required tools and the generation of the provider schema and resource CRDs:

    ➜ make generate
    11:38:21 [ .. ] installing terraform darwin-arm64
    …
    11:38:22 [ OK ] installing terraform darwin-arm64
    11:38:22 [ .. ] generating provider schema for dynatrace-oss/dynatrace 1.66.0
    11:38:24 [ OK ] generating provider schema for dynatrace-oss/dynatrace 1.66.0
    11:38:26 [ .. ] go generate linux_arm64
    Generated 4 resources!
    11:38:59 [ OK ] go generate linux_arm64
    11:38:59 [ .. ] go mod tidy
    11:39:00 [ OK ] go mod tidy

    ➜ tree package/crds
    package/crds
    ├── alerting.crossplane.io_alertings.yaml
    ├── dashboard.crossplane.io_dashboards.yaml
    ├── dynatrace.crossplane.io_providerconfigs.yaml
    ├── dynatrace.crossplane.io_providerconfigusages.yaml
    ├── dynatrace.crossplane.io_storeconfigs.yaml
    ├── event.crossplane.io_events.yaml
    └── notification.crossplane.io_notifications.yaml

With the generated code and CRDs in place, you can now deploy the provider and begin managing Dynatrace resources in your Kubernetes environment.

Step 4: Deploy and run

In this setup, we run the operator locally while it connects to a Kubernetes cluster (where this cluster runs doesn’t matter, as long as it’s reachable).

First, apply the CRDs that make generate created.

    kubectl apply -f package/crds

Once this is done, you can run the operator itself.

    make run

The last missing part is adding the credentials the operator needs to connect to the chosen Dynatrace tenant. The Access management documentation describes how to create the necessary token.

Create the namespace and a secret containing your brand-new access token.

    apiVersion: v1
    kind: Secret
    metadata:
      name: example-creds
      namespace: crossplane-system
    type: Opaque
    stringData:
      credentials: |
        {
          "dt_env_url": "https://my-tenant.com",
          "dt_api_token": "my-secret-token"
        }

Save the manifest as example-creds.yaml, then create the namespace and apply it:

    kubectl create namespace crossplane-system
    kubectl apply -f example-creds.yaml

Finally, the last puzzle piece, the ProviderConfig, can be created:

    apiVersion: dynatrace.crossplane.io/v1beta1
    kind: ProviderConfig
    metadata:
      name: default
      namespace: crossplane-system
    spec:
      credentials:
        source: Secret
        secretRef:
          name: example-creds
          namespace: crossplane-system
          key: credentials

All done! The previously generated CRDs are now available, and creating objects from them in your Kubernetes cluster results in corresponding entities and changes in your Dynatrace tenant. Try it out with a dashboard resource!

    apiVersion: dashboard.crossplane.io/v1alpha1
    kind: Dashboard
    metadata:
      name: example-dashboard
      namespace: crossplane-system
    spec:
      forProvider:
        contents: |
          {
            "dashboardMetadata": {
              "name": "Our small example dashboard",
              "owner": "my@mail.com",
              "preset": true,
              "hasConsistentColors": true
            },
            "tiles": [
              {
                More config…
              }
            ]
          }

Save the manifest as example-dashboard.yaml and apply it:

    kubectl apply -f example-dashboard.yaml
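
Because the dashboard definition travels as a JSON string inside a YAML manifest, a bracket slip in it is easy to miss. A small pre-flight check, sketched below, can catch such syntax errors before applying the manifest. The `checkContents` helper and the sample payloads are hypothetical; the check covers JSON syntax only and says nothing about whether Dynatrace accepts the dashboard.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// checkContents reports an error when the dashboard contents are not
// syntactically valid JSON. It does not validate the dashboard schema.
func checkContents(contents string) error {
	if !json.Valid([]byte(contents)) {
		return errors.New("dashboard contents are not valid JSON")
	}
	return nil
}

func main() {
	// Well-formed payload with an empty tile list (illustrative only).
	good := `{"dashboardMetadata": {"name": "Our small example dashboard"}, "tiles": []}`
	// Same payload with the closing bracket of "tiles" missing.
	bad := `{"dashboardMetadata": {"name": "Our small example dashboard"}, "tiles": [ {} }`

	fmt.Println("good:", checkContents(good))
	fmt.Println("bad:", checkContents(bad))
}
```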

FAQ

Several key questions were raised during the talk and in the following discussions. We’ve summarized the main points:

Q: Why use Crossplane when Terraform can do the same thing?

A: It’s not a matter of “should” versus “shouldn’t.” If you’re already invested in Kubernetes, Crossplane allows you to manage cloud resources while leveraging the same tooling you use to deploy, maintain, and monitor your applications. This makes Crossplane highly convenient for teams already embedded in the Kubernetes ecosystem.

Additionally, these tools don’t exclude each other. One is used to build platforms, and the other is a command-line tool. Their potential use cases differ quite a lot.

Q: How is state management handled?

A: With the Upjet approach, you’re essentially bridging two worlds. Kubernetes stores the state of each object in etcd. Simultaneously, the Crossplane operator runs Terraform in the background, continuously reconciling the state between the Kubernetes Custom Resource (CR) and the Terraform-managed infrastructure.

Q: Can I use the Upjet approach in production?

A: Yes, you can, but remember that the provider uses Terraform under the hood. This means that during each reconciliation loop, a terraform plan and terraform apply run. Due to the nature of these continuous operations, managing a large number of resources this way could demand significant resources.

While the Upjet project is very good at translating the provider, some things need to be added manually. The concept of Kubernetes labels simply doesn’t exist in Terraform. If you want to utilize them, you need to implement them yourself.

Q: How does the mapping between Terraform objects and Kubernetes Custom Resources (CRs) work?

A: The mapping is defined in the `/config` folder when configuring the provider. Here, we specify the relationship between the Terraform object and the corresponding Kubernetes CR. Running the `make generate` command triggers the generation of all necessary code, including the API, client, provider, and CRD (Custom Resource Definition). This allows Kubernetes to manage the Terraform-defined resource seamlessly.

Q: I read about the proposal for Crossplane v2.0. Do you know if that will impact the described provider creation process?

A: Recently, the Crossplane developers created a draft for the next version of Crossplane. In it, they talk in depth about how they want to change composite resources and their structure. This will impact provider creation since they reconcile the aforementioned resources. A dedicated section of the draft discusses the proposed changes. The developers also plan on keeping things backward compatible. For now, we have to wait and see what the final implementation looks like.

Get started

Crossplane enables a seamless cloud-native approach for managing any cloud resource by extending the Kubernetes API. By leveraging Kubernetes as a control plane and using Crossplane compositions, you can declaratively define and automate your entire observability stack.

It’s easy to get started, all you need to start is

If you’re interested in diving deeper, you can check out the following resources from our session:

We hope this talk inspired you to explore Crossplane for your infrastructure automation needs and provided valuable insights into building observability solutions using the power of Kubernetes and declarative infrastructure.
