For cloud operations teams, network performance monitoring is a required but often ineffective task in observability. They need end-to-end visibility into multicloud and hybrid cloud environments to fully grasp activities on the network.

For cloud operations teams, network performance monitoring is central to ensuring application and infrastructure performance.

If the network is sluggish, an application may also be slow, frustrating users. Worse, a malicious attacker may gain access to the network, compromising sensitive application data.

Yet despite having adopted myriad network monitoring tools, organizations still struggle to identify whether the network is at fault for application and infrastructure problems.

According to Shamus McGillicuddy, vice president of research at Enterprise Management Associates, 2022 data shows that 78% of companies plan to increase spending on network visibility tools over the next two years. Network traffic growth is the main reason for the increase, largely because of the adoption of hybrid and multicloud architectures.

As organizations increasingly turn to cloud architecture to achieve their goals, they face a complexity wall: There are too many applications, services, hosts, data flows, and interdependencies between these entities to manage with human effort alone.

According to recent research, 99% of organizations have a multicloud environment, and 82% also use hybrid cloud infrastructure.

“Cloud is underpinning everything,” said Krzysztof Ziemianowicz, a Dynatrace senior product manager, at a Dynatrace Perform conference session on network performance monitoring. “It’s more complex than it sounds.”

As cloud entities multiply, along with greater reliance on microservices and serverless architectures, so do the complex relationships and dependencies among them. All these cloud-based assets and interrelationships generate massive amounts of data that need to be managed and understood in the context of their cloud environments. Every link in the chain, and the flow of data between them, affects a site reliability team’s ability to meet its service-level objectives.

Network performance monitoring core to observability

For these reasons, network activity becomes a key data source in IT observability. Teams need visibility into all dependencies and end-to-end traces for each and every session, including cloud and enterprise network traffic, to proactively identify risks to performance and security.

“The network is still required. Without the network, nothing will happen,” Ziemianowicz said.

Many organizations, somewhat erroneously, respond to cloud complexity by using multiple tools to monitor and manage system health. According to some data, organizations use an average of 10 network monitoring tools across their technology stacks because they lack a unified view of network activity.

But this approach merely perpetuates data silos and cloud complexity.

Organizations need to move from traditional, siloed monitoring practices—where teams track problems within a single domain but don’t identify causation or interdependencies—to a unified observability strategy.

“Ultimately, observability is about connection, not merely collection,” wrote Sudip Datta in “See What Really Matters: Why You Need Observability vs. Monitoring.”

There are several aspects of effective cloud observability and network performance monitoring, including unified data, topological mapping of data, and automation of tasks.

1. Unifying data, unifying observability

Merging siloed data streams in a unified observability strategy requires a different approach.

Gathering data from multiple tools is no longer sustainable as data volumes increase and cloud assets multiply.

A unified strategy starts with how data is aggregated. Rather than collecting and trying to analyze data in multiple tools (or pools), organizations can now ingest network, security, application, and business event data into a single repository for a unified view and analysis.

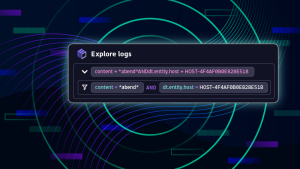

For Dynatrace, that unified repository comes in the form of Dynatrace Grail, a massively parallel processing data lakehouse. With it, teams can analyze data without first moving some of it to lower-cost “cold storage” and later “rehydrating” it to make it usable in the moment. Teams also don’t have to maintain normalized schemas to query data. Instead, they can explore raw data without predetermining the questions to which they want answers.
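The idea of one repository queried without a fixed schema can be illustrated with a small sketch. This is not Grail or its query language; the record fields, host names, and the `query` helper are all hypothetical, chosen only to show network, application, security, and business records sitting side by side and being filtered together.

```python
from datetime import datetime, timedelta

# Hypothetical raw records from different sources kept in one place.
# Field names are illustrative; the records share no fixed schema
# beyond "source", "host", and "ts".
records = [
    {"source": "network", "host": "web-1",
     "ts": datetime(2024, 1, 1, 12, 0, 5), "retransmit_pct": 7.5},
    {"source": "application", "host": "web-1",
     "ts": datetime(2024, 1, 1, 12, 0, 9), "response_ms": 2300},
    {"source": "security", "host": "db-1",
     "ts": datetime(2024, 1, 1, 12, 0, 7), "event": "failed_login"},
    {"source": "business", "host": "web-1",
     "ts": datetime(2024, 1, 1, 13, 30, 0), "orders": 12},
]


def query(records, host, start, window):
    """Return all records for a host within [start, start + window], oldest first."""
    end = start + window
    hits = [r for r in records if r["host"] == host and start <= r["ts"] <= end]
    return sorted(hits, key=lambda r: r["ts"])


# Ask an ad hoc question: what happened on web-1 around noon, across all sources?
related = query(records, "web-1", datetime(2024, 1, 1, 12, 0, 0), timedelta(minutes=1))
for r in related:
    details = {k: v for k, v in r.items() if k not in ("source", "host", "ts")}
    print(r["source"], r["ts"].time(), details)
```

Because the network and application records live in the same collection, a single filter surfaces the retransmission spike next to the slow response it may explain, without switching tools.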

2. Topological mapping of data

Topological mapping of data is also key. With a topological rendering of cloud applications, services, hosts, and more, teams can identify the complex relationships between entities. In turn, they can identify the root cause of a problem, determine the multiple targets it may have affected, and prioritize the remediation of these issues. For Dynatrace, that topological view comes in the form of Smartscape.
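As a rough illustration of why a topology helps, the sketch below models entities as a dependency graph and walks it to find everything a failing component may have affected. It is a minimal, hypothetical example, not how Smartscape works internally; the entity names and the `affected_by` and `prioritize` helpers are assumptions made for this sketch.

```python
from collections import deque

# Hypothetical topology: each key depends on the entities in its list.
depends_on = {
    "checkout-app": ["payment-service", "web-host-1"],
    "payment-service": ["db-host-1", "load-balancer"],
    "search-app": ["search-service"],
    "search-service": ["load-balancer"],
    "web-host-1": ["load-balancer"],
}


def affected_by(root, depends_on):
    """Return every entity that directly or transitively depends on root."""
    # Invert the edges so we can walk from a component to its dependents.
    dependents = {}
    for entity, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, []).append(entity)

    # Breadth-first walk over the inverted graph.
    seen = set()
    queue = deque([root])
    while queue:
        current = queue.popleft()
        for parent in dependents.get(current, []):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return seen


def prioritize(candidates, depends_on):
    """Order candidate problem entities by how many other entities they affect."""
    return sorted(candidates, key=lambda c: len(affected_by(c, depends_on)), reverse=True)


print(sorted(affected_by("load-balancer", depends_on)))
print(prioritize(["db-host-1", "load-balancer"], depends_on))
```

In this toy graph, a load balancer problem reaches both applications, while a database host problem reaches only the checkout path, so the load balancer is ranked first for remediation.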

“Cloud [computing] creates complexity of topology that is beyond human recognition and can’t be modeled a priori because it changes all the time,” Ziemianowicz said.

He noted that Dynatrace’s topological approach is distinctive because it can map all entities automatically, end to end. That provides significant visibility into the factors that determine network performance.

“We can model topology relationships between entities that are known to load balancers and entities that are monitored by OneAgent,” Ziemianowicz said. “This lets us analyze dependencies along the application delivery chain and across an end-to-end application infrastructure.”

Together with a data lakehouse, teams can now analyze petabytes of data without predetermining queries or incurring massive cost to keep data at the ready.

Bringing network performance monitoring to cloud-native and on-premises architectures with Dynatrace

According to Ziemianowicz, network performance monitoring in Dynatrace can address a range of vexing networking questions that organizations need to ask:

- What is the status of network flows on which my apps depend?

- What has been happening on the network when apps experience issues?

- What are the issues with traffic losses and connectivity drops?

- What is the performance status of supporting network devices?

- How do the app hosts experience network performance?

Additionally, Dynatrace has partnered with Gigamon to understand on-premises network traffic flows. “Gigamon is getting those into Dynatrace with the packet observability framework,” Ziemianowicz said. With the Gigamon integration, Dynatrace provides network traffic insights that exceed the typical NetFlow level of detail, thanks to application-aware data enrichment by Gigamon.

Dynatrace is working to wrap these capabilities into a comprehensive application, to be available on the Dynatrace platform.

3. Automation, automation, automation

Another component of effective observability is causal AI, which enables the platform to ingest and provide data with its full context to users. Causal AI is also critical in topological mapping, providing teams with a full view of all entities and dependencies, automatically.

In the Dynatrace platform, causal AI comes in the form of Davis. The AI engine delivers precise answers about the IT environment. These answers are instant, automatic, and continuous. Explore how Davis drives automation and delivers broader, deeper insight into your environment.

Layering automation on top of topological tools offers precise answers about the root cause of incidents, a prioritization of events to remediate, and possibly automated corrective action.

“Full-stack observability solutions allow multiple IT (and business) teams to have a common end-to-end performance view of systems across applications, multiple clouds, infrastructure, and network segments, using advanced analytics models and automation capabilities to identify and solve problems, deliver consistent service levels, readily activate new service capabilities, and act in an automated and accurate fashion,” wrote Stephen Elliott and Mark Leary in the IDC report “Expanding the Digital Experience and Impact with Advanced Business Context.”


For more on Dynatrace and network performance monitoring, click here. For the complete session on networking and observability, click here.

Download this network performance monitoring handbook to learn what effective network performance monitoring requires and how to achieve end-to-end visibility into multicloud and hybrid cloud environments.
