How do you get more value from petabytes of exponentially growing, increasingly heterogeneous data? The short answer: bring the three pillars of observability (logs, metrics, and traces) together in a data lakehouse.

As observability and security data converge in modern multicloud environments, there’s more data than ever to orchestrate and analyze. The goal is to turn more data into insights so the whole organization can make data-driven decisions and automate processes.

Dynatrace Chief Technology Officer Bernd Greifeneder and his colleagues discuss how organizations struggle with this problem and how Dynatrace is meeting the moment.

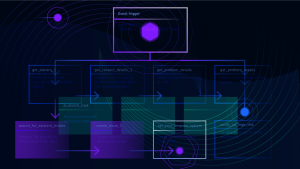

Grail data lakehouse delivers massively parallel processing for answers at scale

Modern cloud-native computing is constantly upping the ante on data volume, variety, and velocity. As teams try to gain insight into this data deluge, they have to balance the need for speed, data fidelity, and scale with capacity constraints and cost. To solve this problem, Dynatrace launched Grail, its causational data lakehouse, in 2022. Grail combines the flexible, large-scale storage of a data lake with the fast, structured analytics of a data warehouse.

“With Grail, we have reinvented analytics for converged observability and security data,” Greifeneder says. With a data lakehouse approach, Dynatrace captures all the data in one place while retaining its context and semantic details. These details include all the relationships among data from different sources. This unified approach enables Grail to vault past the limitations of traditional databases.

From this central platform, teams can use the Dynatrace Query Language (DQL) to build flexible queries that reveal the stories hidden in their data. “This means you can ask any question anytime, all the unknown unknowns,” Greifeneder says.

Grail is schemaless, indexless, and lossless. With no schema, you don’t have to think of all the questions you want to ask of your data ahead of time. And with no indexes, teams can access data faster without having to maintain a massive index that quickly falls out of date. Because the data is lossless, teams from across the organization can query the data with its full details in place. This completeness meets the needs of IT professionals and business analysts alike.
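“Schemaless, indexless, and lossless” is easiest to picture as schema-on-read: records are kept exactly as ingested, and structure is applied only when a query runs. The following Python sketch assumes that model. It is not DQL and not how Grail is implemented; `RAW_EVENTS`, `scan`, and `count_by` are hypothetical names chosen for illustration.

```python
from typing import Any, Callable, Iterable

# Hypothetical raw events kept exactly as ingested (lossless):
# heterogeneous records, no upfront schema, no secondary index.
# Illustration of schema-on-read only; not Grail's engine or DQL.
RAW_EVENTS: list[dict[str, Any]] = [
    {"type": "log", "host": "web-1", "level": "ERROR", "msg": "timeout"},
    {"type": "log", "host": "web-2", "level": "INFO", "msg": "ok"},
    {"type": "metric", "host": "web-1", "name": "cpu", "value": 0.93},
    {"type": "log", "host": "web-1", "level": "ERROR", "msg": "refused",
     "ip": "10.0.0.7"},
]


def scan(events: Iterable[dict[str, Any]],
         predicate: Callable[[dict[str, Any]], bool]) -> list[dict[str, Any]]:
    """Full scan: every record is tested at query time (no index)."""
    return [e for e in events if predicate(e)]


def count_by(events: Iterable[dict[str, Any]], field: str) -> dict[Any, int]:
    """Group and count on a field chosen at query time (schema on read)."""
    counts: dict[Any, int] = {}
    for e in events:
        key = e.get(field)  # records lacking the field group under None
        counts[key] = counts.get(key, 0) + 1
    return counts


# An unplanned question: which hosts are emitting ERROR logs?
errors = scan(RAW_EVENTS,
              lambda e: e.get("type") == "log" and e.get("level") == "ERROR")
print(count_by(errors, "host"))  # {'web-1': 2}
```

Because nothing was declared up front, the same records can answer a different question later, for example `count_by(scan(RAW_EVENTS, lambda e: e.get("type") == "metric"), "name")`, with no schema migration or index rebuild. The trade-off is that every query scans the data, which is why a design like this depends on massively parallel processing.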

“Grail can store and process 1,000 petabytes per day,” Greifeneder explains. Without the overhead of traditional databases, Grail also returns results quickly. “In most cases, especially with more complex queries, Grail gives you answers at five to 100 times more speed than any other database you can use right now.”
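To put the quoted ingest figure in perspective, here is the arithmetic as a small Python function. It assumes decimal (SI) units and a load spread evenly over 24 hours; real traffic is burstier, so peak rates would be higher than this average.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400
PETABYTE = 10**15  # decimal (SI) petabyte, in bytes
TERABYTE = 10**12  # decimal (SI) terabyte, in bytes


def average_rate_tb_per_s(petabytes_per_day: float) -> float:
    """Average rate in TB/s if the daily volume were spread evenly."""
    return petabytes_per_day * PETABYTE / SECONDS_PER_DAY / TERABYTE


rate = average_rate_tb_per_s(1_000)
print(f"{rate:.1f} TB/s")  # 11.6 TB/s
```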

Three pillars of observability converge with context and business data at the massive scale of Grail

The Dynatrace Software Intelligence Platform has always brought the full value of logs, metrics, and traces together with context and user data for AI-powered analysis. Now, that same full-spectrum value, including the three pillars of observability (logs, metrics, and traces in context), is available at the massive scale of the Dynatrace Grail data lakehouse. “You’re getting all the architectural benefits of Grail—the petabytes, the cardinality—with this implementation,” Greifeneder says.

Logs on Grail

Log data is foundational to any IT analytics. Logs on Grail, included in the 2022 release, enables a wide variety of log-based use cases, such as verifying app deployments, isolating faults coming from a single IP address, identifying the root causes of traffic spikes, and investigating malicious user activity.
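As an illustration of one use case above, isolating faults that come from a single IP address, here is a minimal Python sketch. The log line format, the sample data, and the `errors_by_ip` helper are all hypothetical; on the platform itself, this kind of question would be expressed as a DQL query rather than client-side code.

```python
import re
from collections import Counter
from collections.abc import Iterable

# Hypothetical log format: "<timestamp> <level> <client_ip> <message>"
LOG_LINE = re.compile(r"^(\S+) (\w+) (\d{1,3}(?:\.\d{1,3}){3}) (.*)$")


def errors_by_ip(lines: Iterable[str]) -> Counter[str]:
    """Count ERROR lines per client IP, skipping lines that do not parse."""
    counts: Counter[str] = Counter()
    for line in lines:
        m = LOG_LINE.match(line)
        if m and m.group(2) == "ERROR":
            counts[m.group(3)] += 1
    return counts


logs = [
    "2023-02-01T10:00:00Z INFO 10.0.0.5 GET /cart 200",
    "2023-02-01T10:00:01Z ERROR 10.0.0.9 POST /login 500",
    "2023-02-01T10:00:02Z ERROR 10.0.0.9 POST /login 500",
    "2023-02-01T10:00:03Z ERROR 10.0.0.5 GET /cart 502",
    "malformed line",
]
ip, n = errors_by_ip(logs).most_common(1)[0]
print(ip, n)  # 10.0.0.9 2
```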

The Business Events capability enables business analysts to get the real-time insights and broad context they need to answer questions their business intelligence tools can’t.

But logs are just one of the three pillars of observability.

Metrics on Grail

“Metrics are probably the best understood data type in observability,” says Guido Deinhammer, CPO of infrastructure monitoring at Dynatrace. Simply put, metrics are the counts and measures that teams calculate or aggregate over time as the basis for analysis.

But aggregating data in traditional environments sacrifices fidelity, Deinhammer says, and that lost detail is exactly what helps analysts understand the data’s relevance in different scenarios.
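A small numeric example shows what aggregation throws away. The latency values here are invented for illustration: a one-minute window of per-second samples holding steady at 20 ms except for a three-second spike to 900 ms.

```python
from statistics import mean

# Invented data: per-second latency (ms) over one minute, steady at 20 ms
# except for a three-second spike to 900 ms.
samples = [20.0] * 57 + [900.0] * 3

# Pre-aggregating to one value per minute keeps the mean and drops the rest.
minute_avg = mean(samples)

print(f"avg={minute_avg:.0f} ms, max={max(samples):.0f} ms")
# avg=64 ms, max=900 ms
```

The 64 ms average looks healthy, while the raw samples contain a spike 45 times the baseline. Once only the aggregate is stored, that detail cannot be recovered.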

In addition, the sheer number of relationships among data in microservices environments is crippling conventional databases. “Today, you can have a microservice environment with hundreds of thousands of pods talking to 100,000 other pods, each of which is recycled every couple of minutes,” Deinhammer explains. “This creates such high cardinality that it blows up existing metrics databases.”
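Cardinality here means the number of distinct time series, where each unique combination of label values is its own series. This hypothetical Python sketch labels a traffic metric by source and destination and shows how recycling instances, which come back under new identifiers, multiplies the series count even though the number of live instances stays the same. The label names and instance counts are assumptions for illustration.

```python
from itertools import product


def series_cardinality(samples: list[dict[str, str]]) -> int:
    """Distinct time series = distinct combinations of label values."""
    return len({tuple(sorted(labels.items())) for labels in samples})


def traffic_samples(sources: list[str],
                    destinations: list[str]) -> list[dict[str, str]]:
    """Hypothetical metric labeled by (src, dst): one sample per pair."""
    return [{"src": s, "dst": d} for s, d in product(sources, destinations)]


# Generation 1: 4 sources each talking to 5 destinations.
gen1 = traffic_samples([f"a-{i}" for i in range(4)],
                       [f"b-{j}" for j in range(5)])
# After recycling, the same number of instances return under new identifiers.
gen2 = traffic_samples([f"a-{i}" for i in range(4, 8)],
                       [f"b-{j}" for j in range(5, 10)])

print(series_cardinality(gen1))         # 20
print(series_cardinality(gen1 + gen2))  # 40
```

At the scale in the quote, the numbers grow quickly: if each of 100,000 sources talked to just 10 destinations, that is already 1,000,000 series, and every recycle adds another full set. An all-to-all pattern among 100,000 sources and 100,000 destinations would be an upper bound of 10 billion series per cycle.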

With its massively parallel architecture, Grail can ingest and process high cardinality data at the scale of modern cloud architectures without losing fidelity. And with automatic analysis from Dynatrace Davis AI, Deinhammer continues, “if there is a problem, you get answers at a level of detail that allows you to automate remediation well before your end users notice.”
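Massively parallel engines typically split data into partitions, compute partial results on each, and merge them. The following Python sketch illustrates that split, aggregate, and merge pattern under simplifying assumptions: a thread pool stands in for a cluster of workers (in CPython, threads will not actually speed up this CPU-bound work), and `Partial`, `aggregate_chunk`, and `parallel_stats` are hypothetical names, not part of any Dynatrace API.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from functools import reduce


@dataclass(frozen=True)
class Partial:
    """Mergeable partial aggregate computed independently per chunk."""
    count: int = 0
    total: float = 0.0
    maximum: float = float("-inf")

    def merge(self, other: "Partial") -> "Partial":
        return Partial(self.count + other.count,
                       self.total + other.total,
                       max(self.maximum, other.maximum))


def aggregate_chunk(chunk: list[float]) -> Partial:
    return Partial(len(chunk), sum(chunk), max(chunk, default=float("-inf")))


def parallel_stats(values: list[float], workers: int = 4) -> Partial:
    size = max(1, -(-len(values) // workers))  # ceiling division
    chunks = [values[i:i + size] for i in range(0, len(values), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = pool.map(aggregate_chunk, chunks)  # one task per chunk
        return reduce(Partial.merge, partials, Partial())


stats = parallel_stats([float(v) for v in range(1, 101)])
print(stats.count, stats.total / stats.count, stats.maximum)  # 100 50.5 100.0
```

The design choice that matters is carrying mergeable state (count, sum, max) rather than per-chunk averages; averaging averages gives the wrong answer when chunks differ in size.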

Traces on Grail

Traces are another area where cloud-native technologies have complicated application and microservices monitoring. A distributed trace records the path a transaction takes as it touches applications, services, and infrastructure from beginning to end.
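A distributed trace is usually modeled as a tree of spans, where each span records one operation and points to its parent. This hypothetical Python sketch rebuilds that tree from a flat list of spans and prints the path a request took. The ID fields loosely follow the OpenTelemetry span model (trace ID, span ID, parent span ID), but the `Span` class, `render_trace`, and the sample data are invented for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Span:
    """Hypothetical span record; IDs loosely mirror OpenTelemetry's model."""
    trace_id: str
    span_id: str
    parent_id: Optional[str]  # None marks the root span
    service: str
    duration_ms: float


def render_trace(spans: list[Span], trace_id: str) -> list[str]:
    """Rebuild the span tree for one trace and render it depth-first."""
    children: dict[Optional[str], list[Span]] = defaultdict(list)
    for s in spans:
        if s.trace_id == trace_id:
            children[s.parent_id].append(s)

    lines: list[str] = []

    def walk(parent: Optional[str], depth: int) -> None:
        for s in sorted(children[parent], key=lambda x: x.span_id):
            lines.append(f"{'  ' * depth}{s.service} ({s.duration_ms:g} ms)")
            walk(s.span_id, depth + 1)

    walk(None, 0)
    return lines


spans = [
    Span("t1", "a", None, "frontend", 120.0),
    Span("t1", "b", "a", "checkout", 95.0),
    Span("t1", "c", "b", "payment", 60.0),
    Span("t1", "d", "b", "inventory", 20.0),
    Span("t2", "x", None, "batch-job", 5.0),  # different trace, ignored
]
print("\n".join(render_trace(spans, "t1")))
```

Every extra hop adds spans to the tree, so keeping a trace intact means storing and reassembling all of its spans, not just the ones at the edges.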

Open source instrumentation is also making tracing harder by multiplying data volume. “OpenTelemetry, for example, is a way to get metrics, logs, and traces into an observability platform like Dynatrace,” explains Florian Ortner, CPO of applications and microservices at Dynatrace. “With OpenTelemetry, you have spans that provide links and attributes. But the sheer number can be extreme—up to 10 million spans per second.”
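The quoted peak rate is easier to grasp per day. This Python arithmetic assumes the 10 million spans per second were sustained around the clock, which overstates a peak, and uses a 500-byte average span size that is purely an assumption, not a Dynatrace or OpenTelemetry figure.

```python
SPANS_PER_SECOND = 10_000_000   # peak rate from the quote above
SECONDS_PER_DAY = 86_400
ASSUMED_BYTES_PER_SPAN = 500    # hypothetical average; not a Dynatrace figure

spans_per_day = SPANS_PER_SECOND * SECONDS_PER_DAY
bytes_per_day = spans_per_day * ASSUMED_BYTES_PER_SPAN

print(f"{spans_per_day:,} spans/day")           # 864,000,000,000 spans/day
print(f"{bytes_per_day / 10**12:,.0f} TB/day")  # 432 TB/day
```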

Another big trend is Kubernetes and container-based computing, both in the cloud and on premises. “Kubernetes makes spans longer,” Ortner explains. “Microservices get smaller, and you have more hops and additional tiers, such as service meshes.”

As a centralized data platform, Dynatrace makes it possible to keep ever-lengthening traces intact. With Grail, teams can track increasingly long and complex distributed traces with full fidelity and context at massive scale.

Smartscape on Grail: Context at scale

Without a centralized view of what’s happening in a distributed multicloud environment, teams lack situational awareness.

Smartscape interactive topology mapping visualizes the dynamic relationships among all application components across every tier. It gives teams visibility into the dependencies throughout their environment. “With Smartscape on Grail with DQL, you can run queries across multiple data types throughout your entire topology,” Greifeneder says. This access gives teams an unprecedented ability to answer more complex questions than they could before.
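A query that spans topology and several data types can be pictured as a graph walk joined with per-entity signals. In this hypothetical Python sketch, `CALLS` stands in for a Smartscape-style dependency map, while `ERROR_LOG_COUNT` and `CPU_UTILIZATION` stand in for log and metric data; the code finds downstream dependencies of a service that show both errors and CPU saturation. It illustrates the idea only and is not Smartscape’s data model or a DQL query.

```python
from collections import deque

# Hypothetical topology: caller -> callees (a stand-in for a dependency map).
CALLS: dict[str, list[str]] = {
    "frontend": ["checkout", "search"],
    "checkout": ["payment", "inventory"],
    "search": ["inventory"],
    "payment": ["postgres"],
    "inventory": ["postgres"],
    "postgres": [],
}
# Hypothetical per-entity signals from two other data types.
ERROR_LOG_COUNT = {"payment": 42, "postgres": 7}         # from logs
CPU_UTILIZATION = {"postgres": 0.97, "payment": 0.35}    # from metrics


def downstream(entity: str) -> set[str]:
    """Breadth-first walk over everything the entity depends on."""
    seen: set[str] = set()
    queue = deque([entity])
    while queue:
        for callee in CALLS.get(queue.popleft(), []):
            if callee not in seen:
                seen.add(callee)
                queue.append(callee)
    return seen


def suspects(entity: str, cpu_threshold: float = 0.9) -> list[str]:
    """Downstream entities that both log errors and run hot on CPU."""
    return sorted(
        e for e in downstream(entity)
        if ERROR_LOG_COUNT.get(e, 0) > 0
        and CPU_UTILIZATION.get(e, 0.0) >= cpu_threshold
    )


print(suspects("checkout"))  # ['postgres']
```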

Smartscape with DQL also adds new possibilities for automation. “If a team builds a new service or changes an API, how do you know it’s not breaking a dependency you didn’t know about?” Greifeneder asks. “Now, you can ask Grail this question and embed it into your automation so you can have a much smarter and more intelligent rollout. There are so many opportunities. Grail and DQL will give you new superpowers.”
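Greifeneder’s dependency question can be sketched as an automated rollout gate: before changing a service, walk the dependency graph backward to find every direct and indirect caller, and block the rollout if any of them is not a known consumer. Everything here (`CALLS`, `callers_index`, `impacted`, `gate`, and the service names) is hypothetical Python for illustration; in practice the graph would come from a topology query rather than a hard-coded dict.

```python
from collections import defaultdict, deque

# Hypothetical dependency map: caller -> callees.
CALLS: dict[str, list[str]] = {
    "web": ["orders-api"],
    "mobile-bff": ["orders-api"],
    "orders-api": ["pricing"],
    "reporting": ["pricing"],
    "pricing": [],
}


def callers_index(calls: dict[str, list[str]]) -> dict[str, set[str]]:
    """Invert the edges so we can walk from a callee back to its callers."""
    rev: dict[str, set[str]] = defaultdict(set)
    for caller, callees in calls.items():
        for callee in callees:
            rev[callee].add(caller)
    return rev


def impacted(changed: str, calls: dict[str, list[str]]) -> set[str]:
    """All direct and transitive callers of the changed service."""
    rev = callers_index(calls)
    seen: set[str] = set()
    queue = deque([changed])
    while queue:
        for caller in rev[queue.popleft()]:
            if caller not in seen:
                seen.add(caller)
                queue.append(caller)
    return seen


def gate(changed: str, calls: dict[str, list[str]],
         known: set[str]) -> tuple[bool, list[str]]:
    """Allow the rollout only if every impacted caller is already known."""
    unknown = sorted(impacted(changed, calls) - known)
    return (not unknown, unknown)


print(gate("pricing", CALLS, {"orders-api", "web"}))
# (False, ['mobile-bff', 'reporting'])
```

In a pipeline, a `False` result would stop the deployment and surface the unexpected consumers for review before the change ships.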

Unleashing a new era of collaborative analytics on Dynatrace Grail

Having the full spectrum of observability data and the AI-driven value of the Dynatrace platform running on the unified Grail data lakehouse unlocks a whole new era of collaborative analytics for users across the organization. To make that collaboration even easier, Greifeneder and his colleagues announced several new UI and analytics capabilities, including the following:

- an enhanced UI that makes it easier to scan machine data and drill down on individual data points without having to switch contexts;

- new dashboarding capabilities that draw data from everywhere and enable analysts to fine-tune data views using markdown, custom code, and variables; and

- a new Notebooks feature that enables cross-functional teams to create custom analytics for all kinds of use cases.

For more about the new Notebooks feature, see “Dynatrace makes it easy for observability, security, and business data analysis.”

Learn more about the announcements at Perform 2023 in the Perform 2023 Guide: Organizations mine efficiencies with automation, causal AI.
