Modern cloud computing environments are accelerating innovation and productivity. But they can also cause cloud costs and energy consumption to balloon if they're not well architected, monitored, and optimized. Optimizing and managing cloud costs is now a major priority for organizations that want to save money and reduce their carbon footprint.

Business innovation costs money. But organizations may not always have insight into how their innovation initiatives generate costs as well as revenue. That’s why cloud cost optimization is becoming a major priority regardless of where organizations are on their digital transformation journeys.

From managing cloud costs with the major providers to the rising compute costs and carbon emissions that generative AI and large language models (LLMs) create, the cost of innovation is affecting bottom lines across the industry.

In fact, Gartner’s 2023 forecast is for worldwide public cloud spending to reach nearly $600 billion. To put that into perspective, analysts at venture capital firm Andreessen Horowitz report that many companies spend more than 80% of their total capital raised on compute resources.

Generative AI and LLMs are compounding these figures. For example, a CNBC report found that training a single LLM can cost millions of dollars, and updating it can cost millions more.

These costs also have an environmental impact. A Cloud Carbon Footprint report found that global greenhouse gas emissions from technology rival or exceed those of the aviation industry.

Bernd Greifeneder, Dynatrace founder and CTO, acknowledged at Dynatrace Perform 2024 that even his team suffers from tool sprawl.

“CNCFs, thousands of tools, they pick up everything,” Greifeneder said. A platform like Dynatrace, with its Grail data lakehouse and AppEngine, helps teams monitor and manage the cloud costs associated with these tools and services. “We had many do-it-yourself tools that integrated with security and did some other import tasks,” Greifeneder said. “We shifted all that onto the [Dynatrace] platform so we have compliance and privacy issues solved.”

The cost of tool sprawl

The cost of tool sprawl goes beyond the price of the tools themselves. Keeping data consistent, tools updated, and teams collaborating across many separate tools means teams waste time building and maintaining integrations, and they risk losing important data and its context along the way.

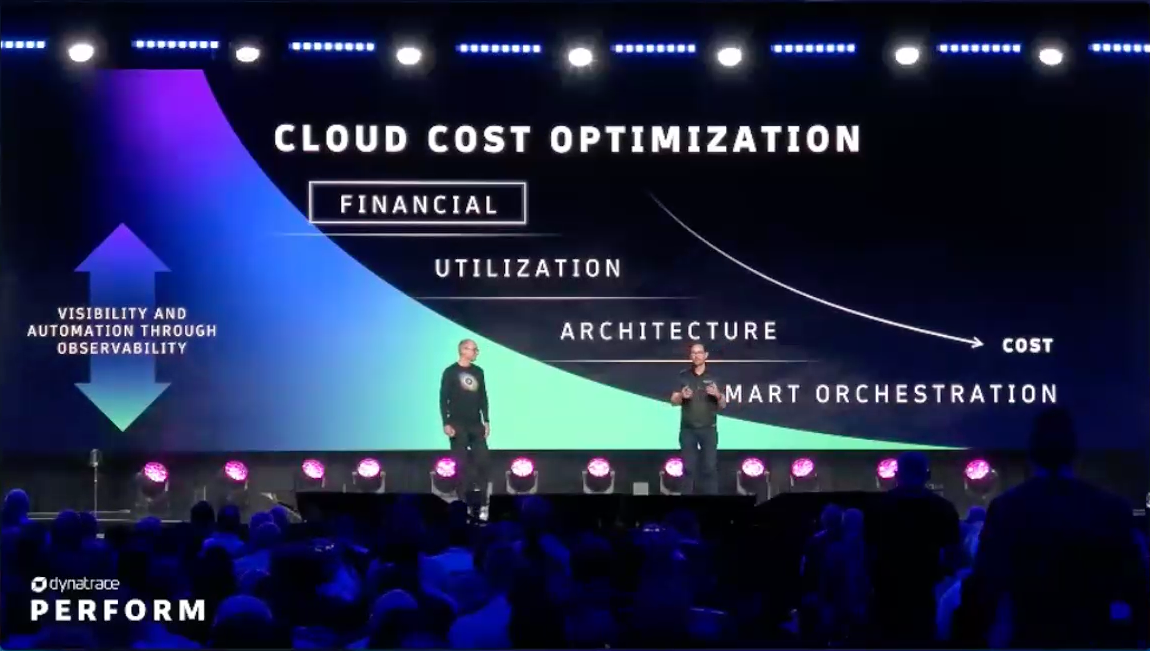

“Every dollar we spend on cloud [infrastructure] is a dollar less we can spend on innovation and customer experience,” said Matthias Dollentz-Scharer, Dynatrace chief customer officer. Dollentz-Scharer outlined four phases of cloud cost optimization and how Dynatrace helps with each.

- Financial. Dynatrace complements the pricing optimizations negotiated with cloud providers by automating savings plans and cost allocation, and by giving teams insight into how they use cloud resources.

- Utilization. Dynatrace tracks over- and underutilized machines, helping teams identify idle capacity and minimize overprovisioning.

- Architecture. The Dynatrace platform tracks workloads and the dependencies among resources. With topology mapping and dependency tracking, Dynatrace provides process-level insight into which processes use which resources, aiding troubleshooting and optimization.

- Smart orchestration. Dynatrace monitors automated workloads, such as those in Kubernetes environments, to ensure they run cost-effectively and to help teams eliminate redundant services for further savings.
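The utilization phase boils down to comparing each machine's observed load against thresholds. The following is a minimal sketch of that idea, not a Dynatrace feature or API: the `HostSample` type, the `classify` and `rightsizing_report` functions, and the 20% and 85% thresholds are all hypothetical, and it assumes average CPU and memory utilization per host have already been collected.

```python
from dataclasses import dataclass


@dataclass
class HostSample:
    # Hypothetical record: averages over some observation window.
    name: str
    avg_cpu_pct: float
    avg_mem_pct: float


# Hypothetical thresholds; sensible values depend on workloads and SLOs.
UNDER_THRESHOLD = 20.0
OVER_THRESHOLD = 85.0


def classify(host: HostSample,
             under: float = UNDER_THRESHOLD,
             over: float = OVER_THRESHOLD) -> str:
    # Use the busier of the two resources: a host is only "underutilized"
    # if both CPU and memory sit below the lower threshold, and it is
    # "overutilized" if either one reaches the upper threshold.
    peak = max(host.avg_cpu_pct, host.avg_mem_pct)
    if peak >= over:
        return "overutilized"
    if peak < under:
        return "underutilized"
    return "ok"


def rightsizing_report(hosts: list[HostSample]) -> dict[str, str]:
    # Map each host name to its utilization class.
    return {h.name: classify(h) for h in hosts}


if __name__ == "__main__":
    fleet = [
        HostSample("web-1", 12.0, 18.5),
        HostSample("db-1", 91.0, 70.0),
        HostSample("batch-1", 45.0, 40.0),
    ]
    for name, status in rightsizing_report(fleet).items():
        print(f"{name}: {status}")
```

Underutilized hosts are candidates for downsizing or consolidation, while overutilized ones may need more capacity to protect performance. Taking the maximum of CPU and memory keeps the sketch conservative, so a memory-bound host is not flagged as idle just because its CPU is quiet.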

How cloud cost optimization mitigates the effects of tool sprawl

For example, the Dynatrace team investigated why its Amazon Elastic Block Store (EBS) usage was growing relative to the rest of the Dynatrace architecture. Using Dynatrace Query Language (DQL) and instrumentation notebooks, the team determined which processes were using the storage. “The team tweaked the Dynatrace [AWS] deployment and automated discrete sizings in Kafka, which helped us save a couple of million dollars,” Dollentz-Scharer said.

“When we started deploying Grail in several hyperscaler locations, we were able to use more predictive AI from our Davis AI capabilities to make our orchestration even smarter,” Dollentz-Scharer continued. That enabled the Dynatrace team to reduce its EBS usage by 50%.

Likewise, when an automation glitch deployed a batch of unneeded Kubernetes workloads into a new Grail region, Dynatrace instrumentation and tooling surfaced the problem. The team fixed it within 48 hours, enabling them to manage cloud costs and save resources.

Reducing cloud carbon footprint

A driving motivation for cloud cost optimization and resource utilization awareness is climate change. The effects of global climate change are evident everywhere, from wildfires to hurricanes to catastrophic flooding.

As a result, Dynatrace introduced the Carbon Impact app in 2023. With its unique vantage point over the entire multicloud landscape, including workloads and their interdependencies, Dynatrace helps organizations pinpoint and reduce their carbon emissions with precision.

“Everyone has a part to play,” said a representative of a major banking group. “Our commitment is to achieve net 0 carbon operations and reduce our direct carbon emissions by at least 75%, and reduce our total energy consumption by 50%, all by 2030.” The organization has already met its commitment to switch to 100% renewable energy.

The company’s IT ecosystem uses thousands of services across traditional data centers and hybrid cloud environments, and it’s continually growing. Accordingly, he said, “it’s critical that we have observability of our energy consumption and footprint to monitor the impact of this growth over time.”

You can’t manage what you can’t measure

The organization uses Dynatrace to optimize energy use and carbon emissions at the data center, host, and application levels. With these insights, the group is modernizing its data centers from the ground up with sustainability and efficiency in mind and identifying underutilized infrastructure.

“But it’s very important as we do this to find that sweet spot,” the banking group representative said. “We can’t risk the stability or performance of the services.” Critically, Dynatrace helps the group’s team observe how reducing energy consumption relates to resilience.

Implementing green-coding principles

At the application level, the banking group is now applying green coding, a practice that minimizes the energy consumed by computation. Green coding involves analyzing and optimizing application source code so that it uses CPU cycles and memory more efficiently.

“We’ll be introducing quality gates for software and development and testing stages to ensure that any new code is as efficient as possible,” the representative said. This includes writing code in the most efficient language for each use case. Dynatrace is a key enabler of the group’s approach, which compares a baseline CO2 measurement of application code before and after each change.
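A before-and-after comparison like this can be expressed as a simple quality gate in a build pipeline. The sketch below is a hypothetical illustration, not the banking group's or Dynatrace's implementation: it assumes energy figures (in kWh) for a baseline run and a candidate run are already available, estimates emissions as energy multiplied by grid carbon intensity (grams of CO2 per kWh), and fails the gate if emissions grow by more than an assumed 2% tolerance. The function names and the sample numbers are made up for illustration.

```python
def estimate_grams_co2(energy_kwh: float, grid_g_per_kwh: float) -> float:
    # Estimated emissions = energy consumed x carbon intensity of the grid.
    return energy_kwh * grid_g_per_kwh


def carbon_gate(baseline_kwh: float,
                candidate_kwh: float,
                grid_g_per_kwh: float,
                tolerance: float = 0.02) -> tuple[bool, float]:
    """Hypothetical quality gate.

    Returns (passed, delta_grams). The candidate passes if its estimated
    CO2 does not exceed the baseline by more than `tolerance`
    (a fraction: 0.02 means 2%).
    """
    base = estimate_grams_co2(baseline_kwh, grid_g_per_kwh)
    new = estimate_grams_co2(candidate_kwh, grid_g_per_kwh)
    passed = new <= base * (1.0 + tolerance)
    return passed, new - base


if __name__ == "__main__":
    # Illustrative numbers only: the same test suite run against the
    # baseline build and the changed build.
    passed, delta = carbon_gate(baseline_kwh=1.5, candidate_kwh=1.2,
                                grid_g_per_kwh=400.0)
    print(f"passed={passed}, delta={delta:+.1f} g CO2")
```

Both runs use the same grid carbon intensity on purpose: holding it fixed isolates the effect of the code change from day-to-day shifts in the electricity mix.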

The organization’s proof of concept, which targeted a single application programming interface (API), reduced emissions by around 2 tons of CO2 per year. Applied across all of its applications, the approach promises significant savings.

End-to-end observability makes it possible to measure both performance and carbon emissions and to manage cloud costs. “In performance optimization, you’re hunting down milliseconds, and with carbon optimization, you’re hunting down grams,” Dollentz-Scharer observed.

For more about managing and optimizing Kubernetes workloads, read “Kubernetes health at a glance: One experience to rule it all.”

Check out the Dynatrace Perform 2024 guide for all Perform coverage.
