Azure Native Dynatrace Service

Transform your enterprise in the cloud with the combined power of Dynatrace and Microsoft through the Azure Native Dynatrace Service, now available in the Azure Marketplace.

Accelerate your journey with unified observability and security

Adopt and modernize faster with Azure Native Dynatrace Service.

Drive better business decisions

Acquire deeper insights into your Azure data.

Streamline deployment

Onboard quickly, automate integration, and get out-of-the-box support for Azure services.

Realize business value faster

Ensure success on Azure with observability, security, and powerful AIOps.

Subscribe in the Azure Marketplace

Retire Azure committed spend and consolidate billing.

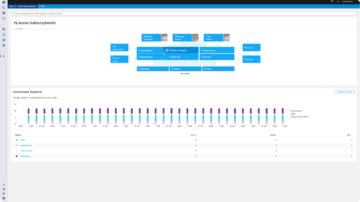

Onboard quickly and easily in the Azure portal

Deploy the Dynatrace Platform in minutes

Ensure Azure visibility at scale

Experience seamless onboarding, thanks to Dynatrace’s deep technical integrations with Azure.

Explore the Azure Native Dynatrace Service

Start monitoring applications, clusters, and the health of your underlying Azure environments to achieve better business outcomes faster.

Implement an all-in-one solution

Capture a holistic view across your Azure environment and extended ecosystem.

Automatically identify problems

Find root causes quickly with AI-powered analytics.

Eliminate manual effort

Dynatrace OneAgent automates deployment, configuration, and updates.

Scale with ease

Automatically scale to meet the diverse needs of your business.

Simplify management

Verify which resource metrics and logs are sent to Dynatrace, and make changes instantly.

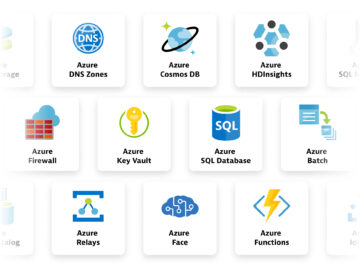

Dynatrace and Azure Resources

Blog: No need to compromise visibility in public clouds with the new Azure services supported by Dynatrace

Blog: Part 2: No need to compromise visibility in public clouds with the new Azure services supported by Dynatrace

Blog: Full visibility into your serverless applications with AI-powered Azure Functions monitoring (GA)

Get a free trial