MIT physics professor and Future of Life Institute co-founder Max Tegmark shares his big thoughts on the big possibilities for AI to transform human innovation.

“Think big. Really big. Cosmically big.” When it comes to artificial intelligence, MIT physics professor and futurist Max Tegmark thinks in terms of 13.8 billion years of cosmic history and the potential of the human race to influence the next 13.8 billion.

In his keynote address at Dynatrace Perform, Tegmark set the stage for AI as the ultimate technology for game-changers and, more importantly, for the role humans play in commanding the power within our grasp.

“When we use technology wisely, we can accomplish things our ancestors could only dream of,” Tegmark says. Framing his talk around the technological accomplishments of the past half-century, Tegmark laid out the possibilities for AI to transform life on earth. Using AI, humankind is already accelerating its capacity to deliver life-saving advances, from diagnosing cancer to solving the protein-folding problem for biomedical research.

“The technology we’re developing is giving life the opportunity to flourish,” Tegmark says. “Not just for the next election cycle, but for billions of years.”

How far will artificial intelligence go?

Max Tegmark defines artificial intelligence simply as the “ability to accomplish complex goals.” The more complex the goals, the more intelligence they call for. There’s no law of physics that precludes artificial general intelligence (AGI), or the ability for technology to learn and accomplish anything a human can. Polls show that most AI researchers expect AGI within decades.

But if a technology can learn like a human through recursive self-improvement, does that mean AI will leave humanity in the dust? Will self-learning technologies create a superintelligence that far exceeds human capacity? And if so, are we doomed or saved?

To answer these questions, Tegmark suggests it’s a matter of perspective. Through human ingenuity, the tech industry has improved computing power by a factor of many millions since computers were invented. Yet engineers have extracted only a minute fraction of the energy that is theoretically available, whether from familiar sources like gasoline and coal or from other sources people could eventually tap. By either measure, there is still enormous room for technology to grow.

Max Tegmark sees the enormous benefits of AI as long as humans cultivate the wisdom we need to minimize risks.

Winning the wisdom race with artificial intelligence

“I’m confident we can have an inspiring future with high tech, but it’s going to require winning the wisdom race,” Tegmark says. “The race between the growing power of the technology and the wisdom with which we manage it.”

In the analog world, people learn by making mistakes. If you try something and it fails (or someone dies in a car crash), you adjust the approach (and invent seat belts). But at the scale of AGI, a reactive trial-and-error approach can be costly and potentially catastrophic. Instead, you need to anticipate what could go wrong in advance and apply safety engineering principles.

To help win the wisdom race, Tegmark and four colleagues co-founded the Future of Life Institute, an organization dedicated to steering powerful technologies in the right direction. Isaac Asimov’s three laws of robotics were too limited, so Tegmark and his colleagues developed the 23 Asilomar AI Principles, a set of practical and ethical guidelines for developing and applying artificial intelligence. More than 1,000 researchers and scientists worldwide have adopted and signed these principles.

Aligning AI’s goals with our own

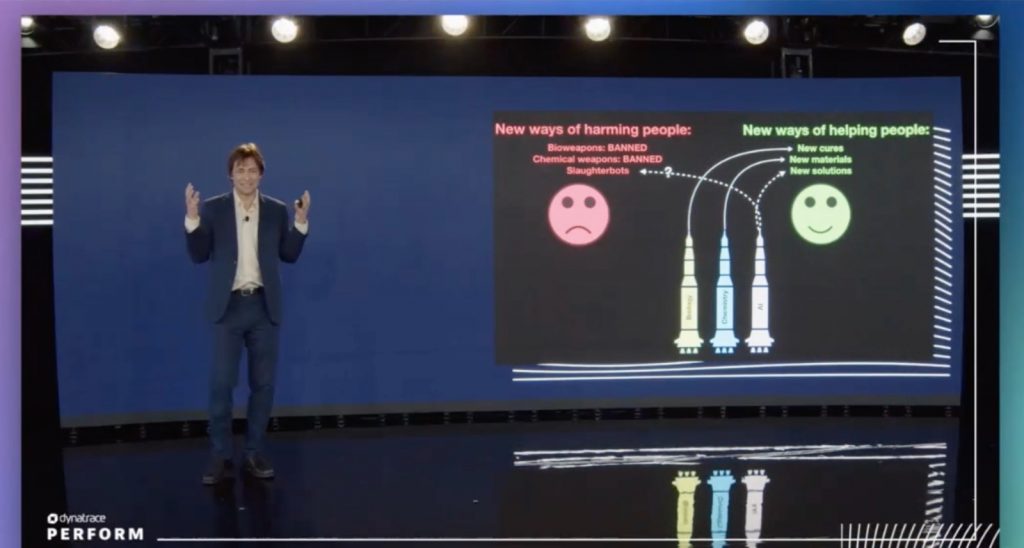

“Any science can be used as a new way of harming people or a new way of helping people,” Tegmark says. To illustrate, he shares three of the 23 Asilomar Principles:

- Avoid a destabilizing arms race in lethal autonomous weapons. We shouldn’t allow AI algorithms to decide to kill people.

- Mitigate AI-fueled inequality. We should share the great wealth artificial intelligence helps produce so everyone is better off.

- Invest in AI safety research. This effort can make systems robust, secure, and trustworthy.

AGI safety, Max Tegmark argues, requires “AI alignment.”

“The biggest threat from AGI is not that it’s going to turn evil, like in some silly movie,” Tegmark says. “The worry is it’s going to turn really competent and accomplish goals that aren’t aligned with our goals.” For example, one way to look at the extinction of the West African black rhino is that humans’ goals weren’t aligned with the rhinos’ goals.

So that humanity doesn’t go the way of those rhinos, we must design AI to understand, adopt, and retain our goals. “This way we can steer AGI to accomplish our goals for an inspiring future,” Tegmark explains.

Envision an amazing future, not a dystopic one

As any captain of industry knows, a positive vision is essential for business success. Once you know where you want to go, you can identify the problems and potential pitfalls. Instead of imagining a dystopic future, we should envision an amazing one. The United Nations’ 17 Sustainable Development Goals, for example, provide a roadmap to a future in which humanity thrives.

“These are challenging and noble goals adopted by nearly every country on earth,” Tegmark says. “Artificial intelligence can help us attain these sustainability goals better and faster. As we continue toward AGI and beyond, let’s not just aim toward them by 2030, let’s accomplish all of them and raise our ambition to go beyond them.”

As individuals, we each have an important role in figuring out how to steer artificial intelligence and make these changes happen.

For its part, Dynatrace, with its causation-based Davis AI, is building this future for customers by delivering on the vision of a world where software works perfectly.

For the 30,000 AI game-changers and technologists attending Dynatrace Perform across the world, Professor Tegmark gave an assignment. “Be proactive. Think in advance about how to steer technology and where you want to go with it. We will be the masters of our own destiny by actually building it.”