In recent years, function-as-a-service (FaaS) platforms such as Google Cloud Functions (GCF) have gained popularity as an easy way to run code in a highly available, fault-tolerant serverless environment.

In a time when modern microservices are easier to deploy, GCF, like its counterparts AWS Lambda and Microsoft Azure Functions, gives development teams an agility boost for delivering value to their customers quickly with low overhead costs.

What is Google Cloud Functions?

Google Cloud Functions is a serverless compute service for creating and launching microservices. The service pairs ideally with single-use functions that tie into other services and is intended to simplify application development and accelerate innovation. GCF is part of the Google Cloud Platform.

Although GCF adds needed flexibility to serverless application development, it can also pose observability challenges for DevOps teams.

Where does Google Cloud Functions fit in the GCP environment?

GCF runs on the Google Cloud Platform (GCP), a comprehensive collection of over 100 services spread across 18 functional categories. Think of GCP as a suite, whereas GCF is a specialized service within it. The headlining feature of GCP is Google’s Compute Engine, a service for creating and running virtual machines in the Google infrastructure—a direct analog to AWS’ EC2 instances and Azure’s VMs.

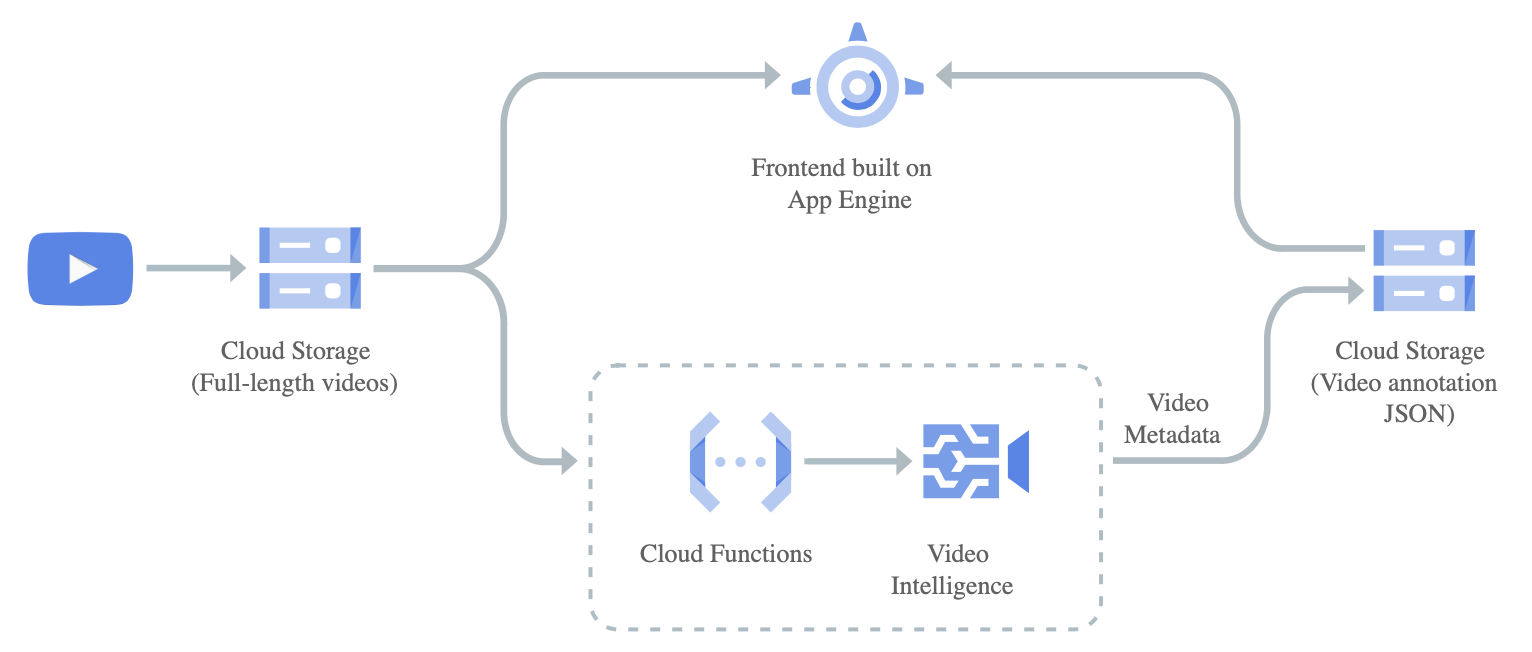

GCF pairs well with Google Cloud Build, which enables testing and other CI/CD tasks based on functions originating in GCF. Additionally, while you might use something like Google App Engine to build frontends, you can pair those interfaces with GCF backends.

How Google Cloud Functions works

GCF operates under the following three tenets:

- Simplify the developer experience

- Avoid lock-in with open-source technologies

- Pay only for accumulated usage

Scalability is a major feature of GCF. As with other FaaS platforms, instances spin up and down as needed to accommodate changing workloads. When increased activity generates more requests than the running instances can serve, GCF creates new instances to handle them.

The Cloud Functions platform enables teams to craft functional, extensible applications that run code when triggered by preset conditions or events. The platform automatically manages all the computing resources required in those processes, freeing up DevOps teams to focus on developing and delivering features and functions. GCF also enables teams to run custom-written code that connects multiple services, written in Node.js, Python, Go, Java, .NET, Ruby, or PHP. These functions can connect with supported cloud databases, such as Cloud SQL and Bigtable.
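To make the event-driven model above more concrete, here is a minimal sketch of what an HTTP-triggered function might look like in Python. It assumes the handler receives a Flask-style request object, as HTTP functions in the Python runtime do; the names `handle_greeting` and `FakeRequest` are hypothetical and exist only for this illustration. In a real deployment the handler would be registered with the platform rather than called directly.

```python
import json


def handle_greeting(request):
    """HTTP-style handler: takes a Flask-like request, returns (body, status, headers)."""
    # Prefer a JSON body; fall back to query-string parameters, then a default.
    payload = request.get_json(silent=True) or {}
    name = payload.get("name") or request.args.get("name") or "world"
    body = json.dumps({"message": f"Hello, {name}!"})
    return body, 200, {"Content-Type": "application/json"}


class FakeRequest:
    """Hypothetical stand-in for the request object, for local experimentation only."""

    def __init__(self, json_body=None, args=None):
        self._json = json_body
        self.args = args or {}

    def get_json(self, silent=False):
        # Mirrors the shape of Flask's Request.get_json for this sketch.
        return self._json
```

Because the handler is a plain function, it can be exercised locally with a stand-in request before it is ever deployed, for example `handle_greeting(FakeRequest(json_body={"name": "Ada"}))`.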

GCF use cases

Cloud Functions are ideal for creating backends, making integrations, completing processing tasks, and performing analysis. GCF leverages webhooks, which facilitate connections to third-party services. Your functions can act as intermediaries between these hooks and APIs to enable automatic task completion. You might do this for payment alerts, GitHub commits, or in response to text alerts.
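When a function sits between a third-party webhook and your own APIs, one common first step is checking that the incoming payload really came from the expected sender. The sketch below is one possible approach, modeled on the HMAC-SHA256 scheme that GitHub uses for its `X-Hub-Signature-256` header. The function names and the way the secret is supplied are assumptions for illustration, not part of GCF itself.

```python
import hashlib
import hmac


def sign_payload(secret: bytes, body: bytes) -> str:
    """Compute a 'sha256=<hexdigest>' signature over the raw request body."""
    digest = hmac.new(secret, body, hashlib.sha256).hexdigest()
    return f"sha256={digest}"


def is_valid_signature(secret: bytes, body: bytes, signature_header: str) -> bool:
    """Return True only if the header matches the signature we compute ourselves."""
    if not signature_header:
        return False
    expected = sign_payload(secret, body)
    # compare_digest runs in constant time, which avoids leaking match position.
    return hmac.compare_digest(expected.encode(), signature_header.encode())
```

A function would typically reject the request early when `is_valid_signature` returns `False`, and only then forward the event to downstream services.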

On the processing side, GCF functions can interface with Google’s own AI/ML technologies to inspect video and image content. Functions integrate with APIs (such as the Video Intelligence API) to make this possible, forming a processing chain that eventually commits data to cloud storage.

GCF also has relevance in IoT and file processing tasks. Overall, these functions excel in scenarios where data enrichment and logic application are paramount.

Finally, GCF can help perform maintenance tasks or even offload on-device tasks to the cloud to conserve processing power.

When not to use GCF

Like other FaaS platforms, GCF is not ideal for every situation. The platform is a standout when performing tasks aligned with Google’s suggested use cases — or when it makes clear business sense — but some situations may match better with other compute approaches.

For example, multi-function applications that are memory intensive may not be ideal for GCF if they scale aggressively. As with all FaaS offerings, maximum memory allocations are limited and can drive up costs for memory-intensive compute functions.

As with other FaaS platforms, GCF may not be ideal for infrequent, time-sensitive tasks. When a container cold starts — spins up for the first time to complete a new request — there is a slight delay in normal response time. At scale, these small delays can add up to precious seconds, which could impact end users and business outcomes. Workloads like these may be better served using a VM-based platform like GCE, or a specialized framework.
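To see how small cold-start delays add up, a rough back-of-the-envelope model can help. The sketch below treats cold starts as a fixed penalty applied to some fraction of requests. Every input (warm latency, cold-start penalty, cold fraction) is a hypothetical value you would need to measure for your own workload; none of them are published GCF figures.

```python
def expected_latency_ms(warm_ms: float, cold_penalty_ms: float, cold_fraction: float) -> float:
    """Average latency when a fraction of requests pay an extra cold-start penalty."""
    if not 0.0 <= cold_fraction <= 1.0:
        raise ValueError("cold_fraction must be between 0 and 1")
    return warm_ms + cold_fraction * cold_penalty_ms


def total_cold_delay_s(requests: int, cold_penalty_ms: float, cold_fraction: float) -> float:
    """Total extra waiting time, in seconds, that cold starts add across all requests."""
    if not 0.0 <= cold_fraction <= 1.0:
        raise ValueError("cold_fraction must be between 0 and 1")
    return requests * cold_fraction * cold_penalty_ms / 1000.0
```

For an infrequently invoked function, the cold fraction can approach 1, which is why the average latency in this model moves toward the full warm-plus-penalty figure for such workloads.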

Benefits of GCF

The upsides of GCF are multidimensional. The service is relatively inexpensive, and pricing is highly flexible since it’s tied to your accumulated usage.

If you’re looking to create light, high-performing functions without worrying about administrative obligations, then GCF is a good choice.

GCF also enables users to create custom runtimes using Docker images, a popular and well-documented format that is integral to other containerized deployments.

Cloud Functions is known to be fast at processing individual requests. Cloud Functions also supports HTTP by default, while providing general hosting that’s not dependent on the usage plan.

Finally, adjacent tools such as Cloud Debugger and Cloud Trace enable observability and diagnosis when coding issues arise.

Observability and monitoring challenges with Google Cloud Functions

To manage apps on the platform, the Google Cloud operations suite includes a set of utilities for writing and reviewing logs, reporting errors, and viewing monitored metrics. These utilities provide important telemetry to help you understand what is happening within the Google Cloud ecosystem and the immediate services it touches.

However, at scale and in multicloud environments, relying on these utilities alone makes it difficult to get end-to-end observability into all the apps and services interacting across the full cloud stack. Also, these utilities often don’t provide adequate contextual information to resolve a problem or quickly pinpoint a root cause. Because each function is its own independent unit, logging just from the application provides only a single dimension of information, which teams have to correlate with related data from other applications, services, and infrastructure.
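One way to make that correlation less painful is to have every function emit structured logs that carry a shared identifier. The following is a minimal sketch, assuming JSON lines written to standard output; the `correlation_id` field name and the helper names are hypothetical choices for this example, not a GCF or Dynatrace convention.

```python
import json
import sys
from datetime import datetime, timezone


def format_log(severity: str, message: str, correlation_id: str, **fields) -> str:
    """Build one JSON log line that carries an ID shared across services."""
    entry = {
        "severity": severity,
        "message": message,
        "correlation_id": correlation_id,  # hypothetical field name for this sketch
        "timestamp": datetime.now(timezone.utc).isoformat(),
        **fields,
    }
    return json.dumps(entry, sort_keys=True)


def log(severity: str, message: str, correlation_id: str, **fields) -> None:
    """Write the structured entry to stdout as a single line."""
    print(format_log(severity, message, correlation_id, **fields), file=sys.stdout)
```

If each service in a request path logs the same identifier, entries from different functions can later be joined on that field instead of being matched by timestamp guesswork.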

This limited visibility makes it difficult to follow distributed traces, which include start-to-finish records of all the events that occur along the path of a given request inside and outside the GCP ecosystem. Distributed tracing enables teams to map and understand dependencies throughout their multicloud stack, and is critical for evaluating performance, pinpointing root causes, and prioritizing responses before issues impact users.
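Following a request across services depends on each hop passing trace context along. As an illustration, the sketch below parses and propagates a header in the W3C Trace Context `traceparent` format (`version-traceid-parentid-flags`). It handles version `00` only, and the helper names are assumptions for this example; in practice a tracing library or agent would normally do this for you.

```python
import re
import secrets
from typing import NamedTuple, Optional

# Version 00 layout: 2 hex version, 32 hex trace ID, 16 hex parent ID, 2 hex flags.
_TRACEPARENT = re.compile(r"00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})")


class TraceContext(NamedTuple):
    trace_id: str
    parent_id: str
    flags: str


def parse_traceparent(header: str) -> Optional[TraceContext]:
    """Return the trace context from a version-00 traceparent header, or None if invalid."""
    match = _TRACEPARENT.fullmatch(header.strip())
    if not match:
        return None
    trace_id, parent_id, flags = match.groups()
    # All-zero IDs are defined as invalid by the specification.
    if set(trace_id) == {"0"} or set(parent_id) == {"0"}:
        return None
    return TraceContext(trace_id, parent_id, flags)


def child_traceparent(ctx: TraceContext) -> str:
    """Build the header for an outgoing call: same trace ID, fresh span ID."""
    span_id = secrets.token_hex(8)  # 8 random bytes -> 16 hex characters
    return f"00-{ctx.trace_id}-{span_id}-{ctx.flags}"
```

The key property is that the trace ID stays constant while each hop gets its own span ID, which is what lets a tracing backend stitch individual function invocations into one end-to-end trace.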

How to get the most out of GCF without sacrificing observability

Like other FaaS providers, Google Cloud Functions offers functionality and flexibility that make it easy to run code in the cloud without provisioning any compute infrastructure. The same factors that make it flexible also make observability and monitoring a challenge, especially in multicloud environments.

To get the most out of Google Cloud Functions and the systems they interact with, teams need end-to-end observability that uses automation and AI assistance to extend beyond the metrics and logs available from the Google Cloud operations suite and include distributed tracing. To effectively monitor modern multicloud environments and understand the complete context of all transactions, teams need the ability to monitor their GCF data along with on-premises resources, containerization platforms, open-source technologies, and end-user data.

Dynatrace provides complete end-to-end observability of all the telemetry data (traces, metrics, and logs) generated by your Cloud Functions and the services they interact with, including Google Cloud SQL, Datastore, Load Balancing, and APIs, among others. Automatic service flows and distributed tracing enable teams to instantly see and easily understand application transactions end to end by looking at the full sequence of service calls made when a function was triggered. Using continuous auto-discovery, the Davis® AI engine alerts you when telemetry data diverges from baselines. This means you can quickly understand the impact of an issue with any part of your multicloud stack and prevent it from proliferating and potentially impacting your business.

Insights from Dynatrace about your Google Cloud Functions in context with your full multicloud stack also provide benefits for teams across the organization. Automatic and intelligent observability from Dynatrace helps DevOps teams manage CI/CD workflows and helps SRE teams automate responses. Dynatrace can prioritize issues identified from RUM data and show teams in real time how every process affects the end-user experience and business outcomes.

Curious to learn more?

Join us for the on-demand Power Demo: Google Cloud Observability for Ops, Apps and Biz Teams.
