What is a telemetry pipeline?

A telemetry pipeline collects telemetry data from sources such as applications, servers, and databases, and routes it to one or more destinations. Along the way, it can enrich and transform that data.

What is telemetry data?

Telemetry is the data used for observability and monitoring, covering signals such as downtime, connectivity issues, errors, and more. It comes in three main forms: metrics, logs, and traces. Telemetry data shows organizations how their applications are performing in real time, which lets them conduct root-cause analysis on issues and investigate and remediate security vulnerabilities.

Additionally, telemetry data can show organizations how users interact with an application or service, as well as how applications or systems interact with each other.
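To make the three forms of telemetry data concrete, here is a minimal sketch of how a metric, a log record, and a trace span might be modeled. The class and field names (`Metric`, `LogRecord`, `Span`, `duration_ms`) are illustrative assumptions, not the schema of any particular product or standard.

```python
from dataclasses import dataclass, field
import time


@dataclass
class Metric:
    """A numeric measurement sampled at a point in time."""
    name: str
    value: float
    timestamp: float = field(default_factory=time.time)


@dataclass
class LogRecord:
    """A timestamped, human-readable event."""
    severity: str
    message: str
    timestamp: float = field(default_factory=time.time)


@dataclass
class Span:
    """One timed operation within a trace (hypothetical, simplified fields)."""
    trace_id: str
    span_id: str
    name: str
    start: float  # seconds
    end: float    # seconds

    @property
    def duration_ms(self) -> float:
        # Convert the elapsed time from seconds to milliseconds.
        return (self.end - self.start) * 1000.0
```

In this sketch, a metric answers "how much," a log answers "what happened," and a span answers "how long did this step of a request take."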

How does a telemetry pipeline work?

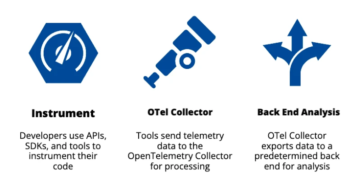

Ultimately, a telemetry pipeline is designed to guide data from a source to a destination, prepare it for analysis, and enable data-driven decision making. There are three main phases of a telemetry pipeline workflow: data collection, processing, and routing.

Initially, the telemetry pipeline collects data from multiple sources, ranging from simple applications and devices to databases and complex multicloud environments. Once the data has been collected, the pipeline processes the data in preparation for analysis. This can include data cleansing and aggregation in order to simplify the data and provide context. Finally, the telemetry pipeline sends the data to its ultimate destination.
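The three phases described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not how any specific product implements its pipeline: the function names (`collect`, `process`, `route`, `run_pipeline`), the record fields (`level`, `error_count`), the destination names (`alerts`, `archive`), and the routing threshold are all hypothetical.

```python
from collections import defaultdict


def collect(sources):
    """Collection: merge raw records from every source into one stream."""
    for source_name, records in sources.items():
        for record in records:
            # Tag each record with where it came from.
            yield {"source": source_name, **record}


def process(records):
    """Processing: cleanse malformed records, then aggregate errors per source."""
    error_counts = defaultdict(int)
    for record in records:
        if "level" not in record:  # cleansing: skip records missing a level
            continue
        if record["level"] == "error":
            error_counts[record["source"]] += 1
    # Aggregation: one summary record per source that reported errors.
    return [
        {"source": source, "error_count": count}
        for source, count in sorted(error_counts.items())
    ]


def route(records, destinations):
    """Routing: send each processed record to a destination by a simple rule."""
    for record in records:
        # Assumed rule: two or more errors go to alerting, the rest to archive.
        name = "alerts" if record["error_count"] >= 2 else "archive"
        destinations[name].append(record)


def run_pipeline(sources, destinations):
    route(process(collect(sources)), destinations)
    return destinations
```

Calling `run_pipeline` with a dictionary of source names mapped to lists of records, plus a dictionary with empty `alerts` and `archive` lists, fills those lists with the aggregated summaries. A real pipeline would read from agents or APIs and write to storage or analytics backends rather than in-memory lists.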

Taking control of data at ingest with a single pipeline

Dynatrace OpenPipeline provides a single pipeline for organizations to manage large-scale data ingestion into the Dynatrace unified observability and security platform. OpenPipeline offers businesses visibility into the data they’re ingesting, as well as complete control over that data, while still maintaining the data’s context.

For more information on how Dynatrace OpenPipeline can help your organization take full control over your observability, security, and business data pipelines, visit our website.
