What is log analysis?

Log analysis is the process of examining computer-generated records known as logs. Software applications, servers, network devices, and other IT infrastructure automatically produce these logs. They contain detailed information about system operations, user activities, error messages, and access requests. Log analysis is a key process in the discipline of log analytics.

The primary goal of log analysis is to extract key data and metrics from these vast volumes of log data and turn them into actionable insights. By applying precise log analysis techniques, organizations can identify trends, uncover anomalies, diagnose issues, and improve overall system performance.

Log analysis vs. log analytics

Log analysis and log analytics are closely related. Both are crucial for monitoring and optimizing system performance but serve different functions and purposes.

Log analysis

Log analysis refers to the manual or automated process of reviewing log files generated by operating systems, software applications, or other digital systems. Its defining characteristics are:

- Purpose: Primarily used for troubleshooting specific issues within a system. Log analysis helps identify patterns and anomalies that could indicate errors or security threats.

- Approach: Often involves a more manual inspection or the use of basic scripts and tools to sift through log data.

- Outcome: The focus is on understanding past events to solve current problems. It's reactive in nature, dealing with issues as they arise.
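As an illustration of the "basic scripts" approach described above, here is a minimal Python sketch that counts entries per log level and pulls out the error lines, much like a simple grep. The log format, the sample data, and the function names are assumptions made for this example and are not tied to any particular product.

```python
import re
from collections import Counter

# Assumed (hypothetical) line format: "<date> <time> <LEVEL> <message>"
LINE_RE = re.compile(r"^(\S+ \S+) (DEBUG|INFO|WARN|ERROR) (.*)$")

SAMPLE_LOG = """\
2024-05-01 10:00:01 INFO Service started
2024-05-01 10:00:05 ERROR Database connection refused
2024-05-01 10:00:06 WARN Retrying database connection
2024-05-01 10:00:09 ERROR Database connection refused
"""


def count_levels(text):
    """Count how many entries appear at each log level."""
    counts = Counter()
    for line in text.splitlines():
        match = LINE_RE.match(line)
        if match:  # skip lines that do not follow the assumed format
            counts[match.group(2)] += 1
    return counts


def lines_at_level(text, level):
    """Return the lines logged at the given level, similar to a grep filter."""
    selected = []
    for line in text.splitlines():
        match = LINE_RE.match(line)
        if match and match.group(2) == level:
            selected.append(line)
    return selected


if __name__ == "__main__":
    print(count_levels(SAMPLE_LOG))
    for line in lines_at_level(SAMPLE_LOG, "ERROR"):
        print(line)
```

A script like this works well for a single incident on a single file, which is the reactive scope that log analysis typically covers.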

Log analytics

Log analytics, on the other hand, is a more comprehensive approach that leverages advanced data analysis techniques to derive insights from log data. It involves:

- Purpose: While it can be used for troubleshooting, log analytics extends to improve system performance, predict future issues, and enhance decision-making.

- Approach: Utilizes sophisticated tools and platforms capable of processing large volumes of log data in real-time or near-real-time. These platforms often incorporate machine learning algorithms and visualization capabilities.

- Outcome: Provides predictive insights and helps in strategic planning. It's proactive, aiming to prevent issues before they occur and continually optimizing operations.
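To make the contrast with one-off scripts concrete, the following sketch flags intervals whose error count deviates sharply from a trailing baseline. It is a deliberately simple z-score check, not a description of how any analytics platform implements anomaly detection. The window size, the threshold, and the sample counts are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def find_anomalies(counts, window=5, threshold=3.0):
    """Return the indexes whose value deviates from the trailing baseline.

    counts: per-interval values, for example error entries per minute.
    window: number of preceding intervals used as the baseline.
    threshold: how many standard deviations count as anomalous.
    """
    anomalies = []
    for i in range(window, len(counts)):
        baseline = counts[i - window:i]
        mu, sigma = mean(baseline), stdev(baseline)
        if sigma == 0:
            # Flat baseline: any change at all is treated as a deviation.
            if counts[i] != mu:
                anomalies.append(i)
            continue
        if abs(counts[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical error counts per minute, with one obvious spike.
errors_per_minute = [4, 5, 3, 4, 5, 4, 40, 5, 4]

if __name__ == "__main__":
    print(find_anomalies(errors_per_minute))
```

With the sample data, only the spike at index 6 is flagged. A production system would also need to handle seasonality and baselines that have been skewed by earlier spikes.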

Key differences

- Scope:
  - Log analysis is often limited to specific incidents or time frames.
  - Log analytics looks at broader trends and long-term data patterns.

- Tools:
  - Log analysis might use simple grep commands or basic log review tools.
  - Log analytics employs platforms that offer advanced analytical features.

- Goals:
  - Log analysis focuses on diagnosing and resolving immediate issues.
  - Log analytics aims to enhance system efficiency and security through insights-driven actions.

In essence, while log analysis and log analytics both deal with log data, the critical difference lies in their scope and approach. Log analysis is about response and resolution, whereas log analytics is about prediction and optimization. Understanding these differences can help organizations choose the right strategy and tools to effectively manage their systems' log data.

What are some use cases for log analysis?

The data and metrics you gather during log analysis depend on the use cases you need to address as part of your larger log analytics goals.

1. Security monitoring and incident response

One of the most critical applications of log analysis is cybersecurity. Logs can reveal unusual patterns that may indicate security breaches or unauthorized access attempts. By continuously monitoring logs, IT teams can quickly detect and respond to potential threats, minimizing damage and ensuring data integrity.
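As a small example of spotting an unusual pattern, the sketch below counts failed logins per source address and reports addresses that reach a limit. The message format, the sample entries, and the limit of three failures are all assumptions for illustration. Real authentication logs vary by system.

```python
import re
from collections import Counter

# Assumed (hypothetical) message format for failed logins.
FAILED_RE = re.compile(r"Failed login for user (\S+) from (\d+\.\d+\.\d+\.\d+)")

AUTH_LOG = [
    "2024-05-01T10:00:01Z Failed login for user admin from 203.0.113.7",
    "2024-05-01T10:00:02Z Failed login for user root from 203.0.113.7",
    "2024-05-01T10:00:03Z Successful login for user alice from 198.51.100.4",
    "2024-05-01T10:00:04Z Failed login for user admin from 203.0.113.7",
    "2024-05-01T10:00:05Z Failed login for user bob from 198.51.100.4",
]


def suspicious_sources(lines, max_failures=3):
    """Return source addresses with at least `max_failures` failed logins."""
    failures = Counter()
    for line in lines:
        match = FAILED_RE.search(line)
        if match:
            failures[match.group(2)] += 1
    return {ip: n for ip, n in failures.items() if n >= max_failures}


if __name__ == "__main__":
    print(suspicious_sources(AUTH_LOG))
```

In practice, a check like this would usually be limited to a time window so that occasional failures spread over days do not trigger alerts.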

2. Performance optimization

Logs provide information about system performance, including response times, transaction volumes, and resource utilization. By analyzing these metrics, IT professionals can identify bottlenecks, optimize resource allocation, and enhance overall system efficiency. This is particularly valuable for businesses that rely on high-availability applications and services.
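One common way to find bottlenecks from response times is to compare a high percentile per endpoint against a latency budget. The sketch below uses the nearest-rank percentile method. The endpoints, the timings, and the 500 ms budget are hypothetical values chosen for the example.

```python
import math
from collections import defaultdict


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def slow_endpoints(entries, pct=95, limit_ms=500):
    """Return endpoints whose `pct` percentile response time exceeds the limit.

    entries: iterable of (endpoint, response_time_ms) pairs parsed from logs.
    """
    by_endpoint = defaultdict(list)
    for endpoint, ms in entries:
        by_endpoint[endpoint].append(ms)
    result = {}
    for endpoint, times in by_endpoint.items():
        value = percentile(times, pct)
        if value > limit_ms:
            result[endpoint] = value
    return result


# Hypothetical (endpoint, response time in ms) pairs.
ACCESS_ENTRIES = [
    ("/checkout", 120), ("/home", 40), ("/checkout", 130),
    ("/checkout", 900), ("/home", 50), ("/checkout", 140), ("/home", 45),
]

if __name__ == "__main__":
    print(slow_endpoints(ACCESS_ENTRIES))
```

Percentiles are used here instead of averages because a few very slow requests can hide behind a healthy-looking mean.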

3. Troubleshooting and issue resolution

When a system experiences technical issues or outages, log analysis becomes an essential tool for diagnosing and resolving problems. Logs can pinpoint the root cause of an issue, whether it's a software bug, hardware failure, or configuration error. This accelerates the troubleshooting process, reducing downtime and minimizing disruptions.
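When hunting for a root cause, the entries just before the first error are often more informative than the error itself. The following sketch returns the first error line together with a few preceding lines. The log lines and the `" ERROR "` marker are assumptions for this example.

```python
def context_before_first_error(lines, before=2):
    """Return the first ERROR line plus up to `before` lines preceding it."""
    for i, line in enumerate(lines):
        if " ERROR " in line:
            return lines[max(0, i - before):i + 1]
    return []  # no error found


# Hypothetical application log around an outage.
OUTAGE_LOG = [
    "10:00:00 INFO Service starting",
    "10:00:01 INFO Deploying config version 42",
    "10:00:02 WARN Setting 'pool_size' missing, using default 0",
    "10:00:03 ERROR No database connections available",
    "10:00:04 ERROR Request failed: /orders",
]

if __name__ == "__main__":
    for line in context_before_first_error(OUTAGE_LOG):
        print(line)
```

In this sample, the warning about the missing setting appears directly before the first error, which points toward a configuration error and away from a hardware failure.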

4. Compliance and audit

Many industries are subject to strict regulatory requirements that mandate the logging and auditing of specific activities. Log analysis is crucial to ensure compliance by providing a clear record of user actions, data access, and system changes. This not only helps meet regulatory obligations but also demonstrates transparency and accountability.

5. Capacity planning

By analyzing historical log data, organizations can forecast future system needs and plan for capacity expansion. This proactive approach prevents performance degradation and ensures that IT infrastructure can handle growing workloads without interruptions.
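A simple form of this forecasting is to fit a straight line to historical usage and estimate when it will reach capacity. The sketch below does this with an ordinary least-squares fit. The monthly figures and the 200 GB capacity are hypothetical, and real growth is rarely perfectly linear, so treat the result as a rough estimate.

```python
def fit_line(ys):
    """Least-squares fit of y = slope * x + intercept for x = 0, 1, 2, ..."""
    n = len(ys)
    xs = range(n)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    return slope, mean_y - slope * mean_x


def periods_until_full(ys, capacity):
    """Estimate how many periods remain until the trend line reaches capacity.

    Returns None when usage is flat or shrinking.
    """
    slope, intercept = fit_line(ys)
    if slope <= 0:
        return None
    x_full = (capacity - intercept) / slope
    return max(0.0, x_full - (len(ys) - 1))


# Hypothetical monthly peak disk usage in GB, derived from historical logs.
monthly_peak_gb = [100, 110, 120, 130, 140]

if __name__ == "__main__":
    print(periods_until_full(monthly_peak_gb, capacity=200))
```

For the sample data, usage grows by 10 GB per month, so the estimate is about six months until the 200 GB limit is reached.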

What are the challenges of log analysis?

Gathering the key data and metrics needed for effective log analysis has always been challenging, requiring analysts to have a deep understanding of the systems they’re monitoring and the use cases they need to analyze. But the increasingly distributed nature of hybrid and cloud computing environments has made log analysis far more complex.

1. Volume and variety of data

Modern IT environments generate an overwhelming amount of log data, often in diverse formats. Such vast volumes can strain storage resources and make efficient analysis more complex.

2. Real-time processing

Timely insights are crucial for incident response and decision-making. Analyzing logs in real time requires robust tools and infrastructure capable of processing data rapidly without sacrificing accuracy.

3. Data noise

Not all log entries are equally relevant. Distinguishing between meaningful insights and irrelevant noise can be challenging, especially when dealing with large datasets. Intelligent filtering techniques are essential to focus on what truly matters.
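One basic filtering technique is to drop low-severity entries and group messages that differ only in variable parts such as IDs or durations. The sketch below shows that idea. The set of noisy levels, the digit-masking rule, and the sample entries are assumptions for illustration.

```python
import re
from collections import Counter

# Levels treated as noise in this example (an assumption, adjust as needed).
NOISY_LEVELS = {"DEBUG", "TRACE"}


def normalize(message):
    """Replace runs of digits with a placeholder so similar messages group."""
    return re.sub(r"\d+", "<n>", message)


def reduce_noise(entries):
    """Count normalized messages per level, ignoring noisy levels.

    entries: iterable of (level, message) pairs.
    """
    counts = Counter()
    for level, message in entries:
        if level in NOISY_LEVELS:
            continue
        counts[(level, normalize(message))] += 1
    return counts


# Hypothetical parsed log entries.
ENTRIES = [
    ("DEBUG", "cache hit key=17"),
    ("ERROR", "Timeout after 30 ms on request 1001"),
    ("ERROR", "Timeout after 30 ms on request 1002"),
    ("INFO", "User 5 logged in"),
]

if __name__ == "__main__":
    for (level, message), n in reduce_noise(ENTRIES).most_common():
        print(n, level, message)
```

Grouping in this way turns thousands of near-identical lines into a short list of message patterns ranked by frequency.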

4. Security and privacy concerns

Logs often contain sensitive information, making them attractive targets for cyberattacks. Ensuring the security and privacy of log data is critical, requiring encryption, access controls, and compliance with data protection regulations.
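Alongside encryption and access controls, masking sensitive values before logs are stored or shared reduces exposure. The following sketch redacts email addresses and IPv4 addresses with regular expressions. The patterns are intentionally simple and are assumptions for this example. They will not catch every format and are not a substitute for a reviewed data protection policy.

```python
import re

# Simplified patterns for illustration; real-world data needs broader coverage.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def redact(line):
    """Mask email addresses and IPv4 addresses in a log line."""
    line = EMAIL_RE.sub("<email>", line)
    return IPV4_RE.sub("<ip>", line)


if __name__ == "__main__":
    print(redact("Login by alice@example.com from 192.0.2.10"))
```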

5. Integration and correlation

Organizations typically have multiple systems generating logs, each with its own format and structure. Integrating and correlating these logs to provide a unified view of the IT environment can be complex and time-consuming.
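A first step toward correlation is to normalize each format into a shared record shape and then order everything on one timeline. The sketch below merges JSON logs and plain-text logs this way. The field names (`ts`, `msg`), the source labels, and the sample lines are hypothetical.

```python
import json
from datetime import datetime, timezone


def parse_json_line(line):
    """Parse a JSON line with assumed 'ts' (epoch seconds) and 'msg' fields."""
    record = json.loads(line)
    return {
        "time": datetime.fromtimestamp(record["ts"], tz=timezone.utc),
        "source": "app",
        "message": record["msg"],
    }


def parse_text_line(line):
    """Parse a plain-text line of the form '<ISO 8601 timestamp> <message>'."""
    stamp, message = line.split(" ", 1)
    return {
        "time": datetime.fromisoformat(stamp),
        "source": "proxy",
        "message": message,
    }


def unified_timeline(json_lines, text_lines):
    """Normalize both formats and return the records sorted by time."""
    records = [parse_json_line(line) for line in json_lines]
    records += [parse_text_line(line) for line in text_lines]
    return sorted(records, key=lambda r: r["time"])


# Hypothetical sample data from two systems.
APP_LOG = [
    '{"ts": 1714557601, "msg": "order 17 received"}',
    '{"ts": 1714557603, "msg": "order 17 failed"}',
]
PROXY_LOG = ["2024-05-01T10:00:02+00:00 upstream timeout on /orders"]

if __name__ == "__main__":
    for record in unified_timeline(APP_LOG, PROXY_LOG):
        print(record["time"].isoformat(), record["source"], record["message"])
```

Converting every timestamp to a timezone-aware value is the key design choice here, because comparing timestamps recorded in different time zones is a frequent source of misleading timelines.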

Streamlined, intelligent log analytics for faster incident response and better security

Real-time insights from log data are essential in today's complex digital ecosystems, where applications, infrastructures, and user expectations constantly evolve. Dynatrace offers a unified observability approach to log analysis and log analytics designed to give organizations powerful, context-aware insights into their data.

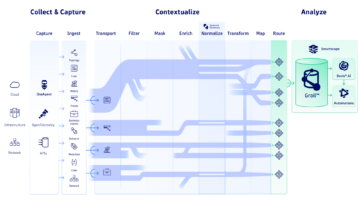

The Dynatrace observability platform is built on three core pillars: **OpenPipeline**, **Davis® AI**, and **Grail**, which integrate data insights to bring unique capabilities to the table:

1. OpenPipeline

- Ingests data from any source, at any scale, and in any format, including Dynatrace OneAgent®, open source collectors such as OpenTelemetry, or other third-party tools.

- Enriches ingested logs with IT context, such as hostname, process name, log level, public cloud region, Kubernetes cluster name, container name, Kubernetes deployment name, code language, trace ID, and more.

- Converts logs into actionable metrics or business events for seamless integration with dashboards and analytics tools.

- Parses common log formats like JSON during ingestion, simplifying data preparation and ensuring streamlined log analysis.

2. Grail

- The Dynatrace observability data lakehouse that centralizes log data to solve all analytics tasks at scale.

- Indexless, schema-on-read architecture that requires no re-hydration or hot/cold storage, so you don’t have to determine your log data use cases before ingesting data.

- Supports deep data analysis with the Dynatrace Query Language for real-time, flexible querying, enabling comprehensive log exploration and threat hunting.

3. Davis® AI

- The Dynatrace AI engine that combines three types of AI to automatically establish performance baselines among entities found in Grail and detect anomalies against these baselines. This enables customers to detect performance issues early.

- Uses causal AI for automated root cause analysis by examining observability signals across logs, traces, metrics, and events.

- Empowers users with generative AI for log analytics, supporting natural language queries through the Dynatrace Query Language.

- Provides predictive AI insights for early trend detection, allowing proactive responses to potential issues before they affect customers.

The Dynatrace approach to log analysis accelerates digital transformation, providing real-time insights that drive rapid issue resolution, optimize performance, and proactively enhance the customer experience.