Dynatrace Blog

Modern cloud done right. Innovate faster and compete more effectively in the digital age.

Announcing General Availability of Davis CoPilot: Your new AI assistant

Simplicity meets power: Introducing the all-new Dynatrace Logs app

Dynatrace ranked as top innovative company in Austria for 2025 by Statista and trend.

Dynatrace joins the Microsoft Intelligent Security Association

Microsoft Ignite 2024 guide: Cloud observability for AI transformation

5 considerations when deciding on an enterprise-wide observability strategy

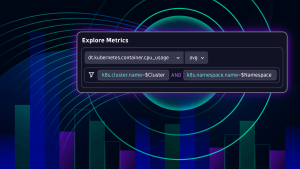

Kubernetes service-level objectives: Optimize resource utilization of Kubernetes clusters with SLOs

OneAgent release notes version 1.303

Analyze query performance: The next level of database performance optimization

OpenPipeline: Simplify access to critical business data

OneAgent release notes version 1.301

Dynatrace SaaS release notes version 1.304

Reliability indicators that matter to your business: SLOs for all data types

OpenTelemetry histograms reveal patterns, outliers, and trends

Dynatrace named a Leader in inaugural 2024 Gartner® Magic Quadrant™ for Digital Experience Monitoring

Helping customers unlock the Power of Possible

Dynatrace achieves SOC 1 Type II certification

Demo: Transform OpenTelemetry data into actionable insights with the Dynatrace Distributed Tracing app

Dynatrace on Microsoft Azure in Australia enables regional customers to leverage AI-powered observability

New Distributed Tracing app provides effortless trace insights

How executives reveal breakthrough insights into customer experiences with Dynatrace to accelerate business growth

Dynatrace SaaS release notes version 1.303

Supercharge your end-to-end infrastructure and operations observability experience

Transform data into insights with Dynatrace Dashboards and Notebooks

Dynatrace named Cloud Security Platform of the Year in the 2024 CyberSecurity Breakthrough Awards