Employing Dynamic Architecture Validation

Chapter: Performance Engineering

A relatively new methodology in performance engineering is dynamic architecture validation. In contrast to static validation, which examines the code itself, it checks the runtime behavior of the application for potential problem patterns. By identifying common architectural implementation errors, such as excessive database retrievals or too many remote service calls, both of which can introduce latency bottlenecks, we can catch and fix many potential performance issues early in the development process.

Dynamic architecture validation lets us test an application before it has been fully implemented, because we can analyze the dynamic behavior of individual components as soon as they can be unit-tested. It also makes it easy to identify architectural regressions introduced by code changes, simply by comparing the dynamic behavior between test runs.

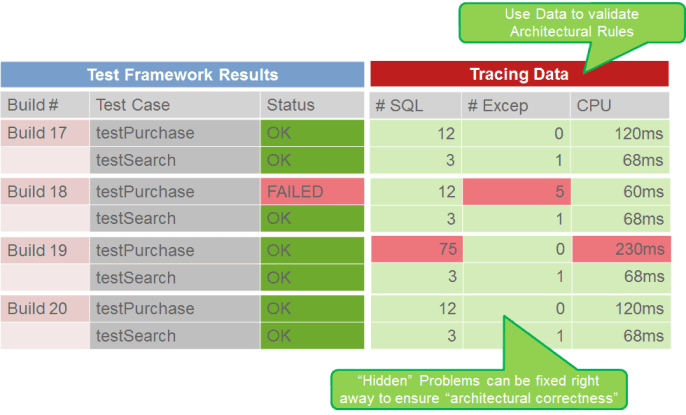

Figure 3.4 shows what we can achieve by validating rules such as "You should not use more than 10 SQL statements." A functional regression was identified by our testing framework in Build 18 and fixed in Build 19, but Build 19 introduced an architectural regression that caused many more SQL statements to be executed than before. Such hidden problems become visible when we look at metrics per test case, which leads to code that is both functionally and architecturally correct. This raises code quality and ultimately results in a better-performing and better-scaling application.
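To make the comparison between builds concrete, here is a minimal Java sketch, assuming the SQL statement count per test case has already been collected by some tracing or logging tool. The class and method names and all measurement values are hypothetical illustrations, not the data behind Figure 3.4; only the limit of 10 statements comes from the rule above.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class SqlRegressionCheck {

    // Rule from the text: no more than 10 SQL statements per test case.
    static final int MAX_SQL_STATEMENTS = 10;

    // Reports test cases that break the rule in the current build, or that
    // execute more statements than they did in the previous build.
    static List<String> findRegressions(Map<String, Integer> previousBuild,
                                        Map<String, Integer> currentBuild) {
        List<String> findings = new ArrayList<>();
        for (Map.Entry<String, Integer> entry : currentBuild.entrySet()) {
            String test = entry.getKey();
            int now = entry.getValue();
            Integer before = previousBuild.get(test);
            if (now > MAX_SQL_STATEMENTS) {
                findings.add(test + ": " + now + " SQL statements, limit is " + MAX_SQL_STATEMENTS);
            } else if (before != null && now > before) {
                findings.add(test + ": SQL statements rose from " + before + " to " + now);
            }
        }
        return findings;
    }

    public static void main(String[] args) {
        // Hypothetical measurements per test case for two consecutive builds.
        Map<String, Integer> previous = new LinkedHashMap<>();
        previous.put("testLogin", 3);
        previous.put("testSearch", 5);
        previous.put("testCheckout", 6);

        Map<String, Integer> current = new LinkedHashMap<>();
        current.put("testLogin", 3);
        current.put("testSearch", 42);
        current.put("testCheckout", 8);

        findRegressions(previous, current).forEach(System.out::println);
    }
}
```

Flagging any increase, not only limit violations, catches a regression while it is still small, which is exactly the situation where all tests still pass functionally.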

We've mentioned the number of database statements as one of the common problem patterns that dynamic architecture validation can identify. Let's now expand on this and discuss how to identify these and other common patterns.

Identifying Common Problem Patterns

The goal of dynamic architectural validation is to recognize potential trouble spots in the application's runtime behavior that can lead to performance and scalability problems. For instance, executing 20 SQL statements for a login that could be handled by a single statement is an obvious problem. It might go unnoticed on a developer's workstation, where the developer is the only user. But under heavy load with thousands of users, a login that requires 20 times as many SQL statements as necessary will put unnecessary stress on the database.

The possible problems can be divided into two categories:

- Some problems can be recognized from characteristics of the execution flow alone. An example is the N+1 query problem in Hibernate, which shows up as the same query being executed many times with different parameters. A web application that makes a large number of remote calls, or one with faulty caching, would be another example.

- Other architecture problems require additional information for analysis, and a responsible architect must determine the necessary parameters and overall test conditions. Examples include limiting the volume of data transferred or avoiding synchronous remote calls (recognizable from the method being called).
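Problems in the first category can be detected mechanically. As a rough sketch, assuming we already have the chronological list of SQL strings executed during one operation, we can replace literal parameter values with `?` and count how often each resulting query template appears; a template that repeats once per row is the typical N+1 signature. The class `NPlusOneDetector`, its regular expressions, and the threshold are illustrative assumptions, and real SQL (escaped quotes, comments) would need a proper parser.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

class NPlusOneDetector {

    // Replaces quoted string literals and standalone numbers with '?' so that
    // queries differing only in their parameters map to the same template.
    static String toTemplate(String sql) {
        return sql.replaceAll("'[^']*'", "?")
                  .replaceAll("\\b\\d+\\b", "?")
                  .trim();
    }

    // Counts how often each query template occurs in a chronological trace,
    // preserving the order in which templates first appeared.
    static Map<String, Integer> countTemplates(List<String> trace) {
        Map<String, Integer> counts = new LinkedHashMap<>();
        for (String sql : trace) {
            counts.merge(toTemplate(sql), 1, Integer::sum);
        }
        return counts;
    }

    // Returns templates executed more than 'threshold' times for one operation.
    static List<String> suspects(List<String> trace, int threshold) {
        return countTemplates(trace).entrySet().stream()
                .filter(e -> e.getValue() > threshold)
                .map(Map.Entry::getKey)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        // Hypothetical trace: one parent query, then one child query per order.
        List<String> trace = List.of(
            "select * from orders where customer_id = 7",
            "select * from order_items where order_id = 101",
            "select * from order_items where order_id = 102",
            "select * from order_items where order_id = 103");
        System.out.println(suspects(trace, 1));
    }
}
```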

The responsible software architect(s) must define a solid set of architectural rules covering both categories, such as these:

- Setting a limit on the total number of database queries

- Disallowing the same database query to be executed multiple times for the same operation
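Both example rules can be expressed as a small check over the statements recorded for one operation. The sketch below assumes a hypothetical `ArchitectureRules.validate` method that receives the executed SQL text (including parameter values) and a limit chosen by the architect; it is not the API of any particular tool.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class ArchitectureRules {

    // Rule 1: limit the total number of database queries per operation.
    // Rule 2: the identical query (same SQL text and parameters) must not run twice.
    static List<String> validate(String operation, List<String> executedSql, int maxQueries) {
        List<String> violations = new ArrayList<>();
        if (executedSql.size() > maxQueries) {
            violations.add(operation + ": " + executedSql.size()
                    + " queries executed, limit is " + maxQueries);
        }
        Map<String, Integer> seen = new HashMap<>();
        for (String sql : executedSql) {
            int count = seen.merge(sql, 1, Integer::sum);
            if (count == 2) { // report each duplicated query only once
                violations.add(operation + ": duplicate query '" + sql + "'");
            }
        }
        return violations;
    }

    public static void main(String[] args) {
        // Hypothetical statements recorded for one login operation.
        List<String> login = List.of(
            "select * from users where name = 'alice'",
            "select * from roles where user_id = 7",
            "select * from roles where user_id = 7");
        validate("login", login, 2).forEach(System.out::println);
    }
}
```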

Practical methods for checking these rules depend largely on the tools used and on the degree of automation they support. Tracing or diagnostic tools that show the execution path in chronological order tend to be better suited to enforcing rules, because they capture complete information, such as the number of methods executed.

Logging mechanisms that record very detailed information about the execution path can also be used to validate certain rules, depending on the granularity of the available log information. When focusing on database activity, a logging framework that produces log output for each database query execution is often sufficient. This may require special configuration or a custom extension of the logging framework to produce the required output.
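One form such a custom extension could take is a `java.util.logging` handler that captures every message sent to a dedicated SQL logger during a test. This sketch assumes the data-access layer logs each executed statement at level `FINE` to a logger named `app.sql`; that logger name and the `SqlCaptureHandler` class are hypothetical. The captured list can then be checked against rules such as the query limits described above.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.logging.Handler;
import java.util.logging.Level;
import java.util.logging.LogRecord;
import java.util.logging.Logger;

// Collects every loggable message published to it so a test can inspect them.
class SqlCaptureHandler extends Handler {
    private final List<String> statements = new ArrayList<>();

    @Override
    public synchronized void publish(LogRecord record) {
        if (isLoggable(record)) { // respects this handler's level and filter
            statements.add(record.getMessage());
        }
    }

    @Override public void flush() { }
    @Override public void close() { }

    // Returns a copy so callers cannot modify the captured list.
    synchronized List<String> statements() {
        return new ArrayList<>(statements);
    }

    public static void main(String[] args) {
        // Hypothetical logger name; the data-access layer would log each query here.
        Logger sqlLogger = Logger.getLogger("app.sql");
        sqlLogger.setLevel(Level.FINE);
        SqlCaptureHandler capture = new SqlCaptureHandler();
        sqlLogger.addHandler(capture);

        // Stand-ins for what the data-access layer would log while a test runs.
        sqlLogger.fine("select * from users where name = ?");
        sqlLogger.fine("select * from roles where user_id = ?");

        System.out.println(capture.statements().size() + " SQL statements logged");
        sqlLogger.removeHandler(capture);
    }
}
```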

You've learned that the rules for dynamic architecture validation are defined by the software architect. It is also the architect who makes sure that all developers understand these rules, through mentoring or training as well as during code reviews. This ensures that developers keep the rules in mind from the start, which leads to higher-quality code. The next step is to automate the validation of these rules instead of manually inspecting measurements and comparing them against the rules. Let's discuss how that works.

Automating Performance Validation

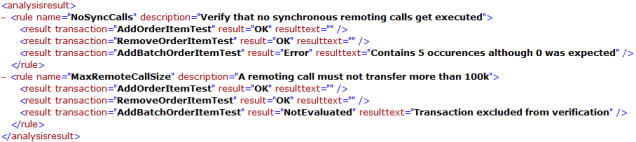

Performance and architectural-rule validation can be automated and integrated into the build process using one of several tools available for the task. There are both open-source and commercial solutions, whose capabilities vary in ease of use, degree of automation, and flexibility of rule definition. To show how this works, we will look at Dynatrace and its capabilities. Figure 3.5 shows a results file produced by Dynatrace. A set of architectural rules was validated against trace and diagnostics data gathered for a set of executed unit tests. The listing indicates which tests passed and which failed according to the rules specified by the architect.
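Dynatrace's own rule definitions are not reproduced here. As a tool-neutral sketch of the same idea, the hypothetical `BuildGate` class below prints a pass/fail line per test, roughly like a results listing, and returns a status code that a build step could pass to `System.exit` so that a violated rule fails the build. The per-test limits, the default limit, and the measured values are assumptions for illustration.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class BuildGate {

    // Prints PASS/FAIL per test and returns 0 if every test stays within its
    // SQL statement limit, 1 otherwise (usable as a process exit code).
    static int evaluate(Map<String, Integer> measured,
                        Map<String, Integer> limits,
                        int defaultLimit) {
        int failures = 0;
        for (Map.Entry<String, Integer> e : measured.entrySet()) {
            int limit = limits.getOrDefault(e.getKey(), defaultLimit);
            int actual = e.getValue();
            boolean passed = actual <= limit;
            if (!passed) {
                failures++;
            }
            System.out.printf("%-14s %s (%d of max %d SQL statements)%n",
                    e.getKey(), passed ? "PASS" : "FAIL", actual, limit);
        }
        return failures == 0 ? 0 : 1;
    }

    public static void main(String[] args) {
        // Hypothetical measurements and architect-defined limits.
        Map<String, Integer> measured = new LinkedHashMap<>();
        measured.put("testLogin", 4);
        measured.put("testCheckout", 37);
        Map<String, Integer> limits = Map.of("testLogin", 5);

        int status = evaluate(measured, limits, 10);
        System.out.println(status == 0 ? "Architecture checks passed" : "Architecture checks failed");
        // In a real build step, call System.exit(status) so a failure breaks the build.
    }
}
```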

Even if you analyze runtime behavior manually, using a debugger or by examining the corresponding log output, you'll gain a better understanding of the application's behavior. The more complex the application, the more important it is to run these analytical tests.

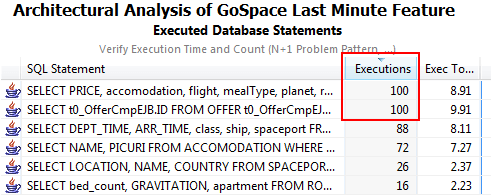

Figure 3.6 shows another sample output, this time from a Dynatrace analysis of the database calls made by a test transaction. The report makes it obvious that some queries were executed hundreds of times for a single operation, which clearly violates one of our architectural rules.

It's difficult for application developers to understand the detailed behavior of every component they use, so undesirable side effects inevitably turn up during load testing. The cycle of test, fix, and retest can be quite time-consuming if you wait until the end of the project lifecycle. In my own experience, many of the problems revealed during load testing or in production could have been detected and avoided during development.

It's also important to realize that the benefits of dynamic architecture validation are not limited to the development phase. Cache behavior in particular should also be tested this way, both during load tests and in production.
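Testing cache behavior usually comes down to counting hits and misses. Here is a minimal sketch, assuming a hypothetical `CountingCache` wrapper around a `HashMap` and a loader function that stands in for the expensive database or remote call. It is not thread-safe and assumes the loader never returns `null`, so it illustrates the measurement rather than a production cache.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Cache wrapper that records hits and misses so tests can assert on cache behavior.
class CountingCache<K, V> {
    private final Map<K, V> entries = new HashMap<>();
    private final Function<K, V> loader;
    private int hits;
    private int misses;

    CountingCache(Function<K, V> loader) {
        this.loader = loader;
    }

    V get(K key) {
        V value = entries.get(key);
        if (value != null) {
            hits++;
            return value;
        }
        misses++;                    // every miss triggers the expensive load
        value = loader.apply(key);
        entries.put(key, value);
        return value;
    }

    int hits() { return hits; }
    int misses() { return misses; }

    double hitRatio() {
        int total = hits + misses;
        return total == 0 ? 0.0 : (double) hits / total;
    }

    public static void main(String[] args) {
        // Hypothetical loader standing in for a database or remote call.
        CountingCache<Integer, String> cache = new CountingCache<>(id -> "product-" + id);
        for (int i = 0; i < 10; i++) {
            cache.get(i % 2); // only two distinct keys: 2 misses, 8 hits
        }
        System.out.printf("hits=%d misses=%d ratio=%.2f%n",
                cache.hits(), cache.misses(), cache.hitRatio());
    }
}
```

A test can then assert, for example, that the number of misses equals the number of distinct keys requested, which would reveal a cache that is never populated or is evicted too early.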
